Chapter 8 Regression

8.1 Videos

While I had intended on producing my own videos for this chapter, the existing videos I found were just too good.

Video: Correlation Doesn’t Equal Causation - 13 min

Quick note about this video: This one and the one on regression are excellent. However, I was surprised to see Karl Pearson’s work on the heights of fathers and sons included without editorial comment. The reason why Pearson was studying this problem is worth discussing. Oddly, I found an excellent video describing this from another channel from the same organization. Pearson isn’t discussed specifically, but both Pearson and Galton were British mathematicians who developed many of the techniques we are using today (see their Wikipedia pages for a list). Therefore, their legacy lives on in our work, and you’ll find connections between modern psychology and these two in this video:

Video: Eugenics and Francis Galton - 13 min

Video: Regression - 13 min. This is an excellent introduction to GLM and regression. Worth watching more than once.

Because it’s longer and lower quality than the videos above, you don’t need to watch the following video. But I am including it here to provide as many resources as possible.

8.2 Introduction

The goal in this chapter is to introduce linear regression, the standard tool that statisticians rely on when analysing the relationship between interval scale predictors and interval scale outcomes. Stripped to its bare essentials, linear regression models are basically a slightly fancier version of the Pearson correlation (Section 8.4) though as we’ll see, regression models are much more powerful tools.

8.3 The General Linear Model (GLM)

Text by David Schuster

We have not even done \(t\)-tests in R yet and we are already doing multiple regression! This is intentional. One theme of this course is that statistical concepts that seem to be independent are sometimes mathematically related. Such is the case with regression. Regression is the mathematical basis of most of the inferential statistical procedures we use, including \(t\)-tests, \(F\)-tests (including all variations of analysis of variance, also called ANOVA), and, of course, correlation and multiple regression. Besides introducing linear regression, which is powerful and versatile, this chapter will introduce the mathematical concept that underlies all of these procedures, the general linear model (GLM). As an example of GLM, we will see that linear regression is perhaps the most powerful and versatile technique we will discuss in this class, because everything that follows can be implemented as a GLM.

The key concept of the GLM is that it measures a linear relationship between one or more outcome variables (in our research they will usually be the dependent variable) and one or more predictor variables (usually independent variables). GLM models that have a single outcome variable are univariate statistics. GLM modeling with a single predictor is called simple regression. In this chapter, we will discover that we can add an unlimited number of predictors to a linear model (although we will discover that there are situations where we can have too many variables), which is what defines multiple regression (it contains multiple independent variables). Our course will focus on univariate statistics. You should be aware, however, that a more complex version exists; we can combine multiple dependent variables on the left side of the equation. Including more than one outcome variable in a model is called multivariate statistics.

8.3.1 The Traditional approach: Two kinds of parametric statistical tests

Statistics teachers and textbook authors sometimes de-emphasize GLM in favor of presenting statistical tests as being one of two types:

Statistical tests that measure linear relationships (e.g., correlation)

Statistical tests that measure differences between groups (e.g., z-test)

This characterization can be useful, as researchers find themselves wanting to measure a linear relationship (i.e., Is there a correlation between A and B?) or measure differences between groups (i.e., Is there a difference between Condition A and Condition B?). Tests of linear relationships are a natural fit for observational research: you can measure two quantitative variables and see if they are related. Because observational researchers typically have continuous variables, multiple regression is the more convenient tool for them. Experimental researchers, meanwhile, want to see if their discrete manipulation (participants are either in Condition A or Condition B; it is a discrete IV) causes a change in their dependent variable. Because they often have a discrete IV, software designed to run an ANOVA can make the analysis more convenient than adapting it to run as a multiple regression. It is important to note that this is merely a matter of convenience. Both procedures use GLM.

This distinction between linear relationships and group differences can be misleading when researchers think that these two techniques are separate. Any t-test or ANOVA could be run as a multiple regression. Or, worse, researchers may mix up the research design and the statistical analysis. Therefore, it is important to state:

Regression can be used with continuous or discrete predictor variables. There is a rule about this; discrete predictors in a regression must be dichotomous (only having two possible values). A yes/no question could be added to a regression model. A question asking participants their favorite color could not be added to a regression model without an additional step. Non-dichotomous, discrete predictors are added to regression models using a technique called dummy coding, which turns a categorical variable into a series of dichotomous variables (we will learn how to do this later on). Dummy coding would lead researchers to the same answer as running an ANOVA.

ANOVA is designed for discrete predictor variables (with as many categories or levels as you would like). ANOVA can be used with continuous predictor variables if we make them discrete, but that is rarely advisable. One way to do this is a median split (scores below the median are 0 and scores above the median are 1). If one participant scores just below the median and another very far below the median, they will both be scored the same. Therefore, this throws away variance and can reduce statistical power. For this reason, multiple regression is the more adaptable technique. That said, we will see that we use some ANOVA calculations on our regression models when we do null hypothesis significance testing (NHST).

All GLM procedures can be used with non-experimental, quasi-experimental, or experimental research designs. Whether inferences can be made about causality is affected by the research design, not the choice of statistical technique. Both regression and ANOVA can show us if two variables are related. Thus, which one is the right statistic depends on the type of measurement used in the study, not whether the study is an experiment, quasi-experiment, or non-experiment. If you have two continuous variables, you should use a correlation. If you have a discrete IV and a continuous DV, you could use either correlation or a t-test. You can use a correlation to analyze experiments, quasi-experiments, or non-experiments. You can use a t-test to analyze experiments, quasi-experiments, or non-experiments. A correlation analysis is not the same thing as a “correlational research design.” For this reason, “experiment, quasi-experiment, and non-experiment” are much clearer labels.

The same model and data tested as a regression or as an ANOVA will yield the same mean differences, effect size, and p-value. Regression and ANOVA, because they are both GLM, have the same statistical power.

All GLM procedures are parametric statistics, which means that they make assumptions about the population distributions from which we sample. In practice, this means that parametric statistics typically have more assumptions than their nonparametric alternatives. We need to learn which assumptions are important and how we can check our data to see if assumptions are met. It is an oversimplification to say that nonparametric stats have no assumptions; they just have fewer assumptions.
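The claim above that dummy coding leads to the same answer as an ANOVA can be checked directly. The sketch below is an illustration only: it assumes the PlantGrowth data set that ships with R (plant weights in three groups) rather than any data from this chapter, and the object names are made up for the example. It first shows the 0/1 columns that R builds for a three-level factor, then fits the same model once with lm() and once with aov().

```r
# PlantGrowth ships with R: 30 plant weights in three groups (ctrl, trt1, trt2)
data(PlantGrowth)

# Dummy coding: the three-level factor becomes two dichotomous (0/1) columns,
# alongside a column of 1s for the intercept
dummies <- model.matrix(~ group, data = PlantGrowth)
head(dummies)

# The same model fitted as a regression and as a one-way ANOVA
fit_lm  <- lm(weight ~ group, data = PlantGrowth)
fit_aov <- aov(weight ~ group, data = PlantGrowth)

# Overall F statistic and p-value from each route
f_lm  <- unname(summary(fit_lm)$fstatistic["value"])
p_lm  <- anova(fit_lm)[["Pr(>F)"]][1]
f_aov <- summary(fit_aov)[[1]][["F value"]][1]
p_aov <- summary(fit_aov)[[1]][["Pr(>F)"]][1]

# Side-by-side comparison
rbind(regression = c(F = f_lm, p = p_lm),
      anova      = c(F = f_aov, p = p_aov))
```

Both rows of the final table should match, because both functions are fitting the same GLM.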

8.3.2 The GLM approach

\[ Y = BX + I + E \] With GLM, we are using the equation for a line to assess and describe the relationship between variables. You will see this equation several times in this chapter with different letters labeling each element. The elements are:

\(Y\), an outcome variable, which can also be called the regressand, response variable, predicted variable, or output variable

\(X\), a predictor variable, which can also be called the regressor, independent variable, treatment variable, or factor

\(B\), a model parameter, also called the weight, slope or the coefficient, which defines the relationship between \(X\) and \(Y\)

\(I\), the intercept, which gives the estimated value of Y when \(X = 0\). It is also the y-intercept of the regression line.

\(E\), error, also called the residual

The techniques in this chapter expand upon this approach of creating a model and using it to estimate an outcome variable. For example, each of these elements can actually be a list (a matrix) of observations. Further, we can expand this model such that \(XB\) is a combination of several predictor variables, not just one. Or, we could create an even simpler model with no predictors.

It might help to illustrate this with an example. Kozma and Stones (1983) found that good health predicted happiness. Imagine that I wanted to predict your happiness score right now. However, I know nothing about you. What could serve as my best guess of your happiness? I could use the population mean. That would result in a model \(Y = I + E\), where \(Y\) is your predicted happiness, predicted on the basis of the population mean (\(I = \mu\)) and nothing else. As there are no predictor variables, there would be no \(XB\) term. I could probably do a bit better if I also knew your health score. This new model would be \(Y = XB + I + E\), where \(X\) is your health score, and \(B\) is a model parameter that explains how to adjust your predicted happiness score based on the health score (technically, it says to increase the predicted \(Y\) by \(B\) units for every 1 unit increase of \(X\)). We have just created a linear regression model, which is a use of the GLM. We have also shown how the addition of predictors (assuming they are good predictors that independently correlate with our outcome variable) can increase the predictive power of a model. This pattern could continue if we added an additional explanatory variable, leading to a multiple regression equation in the form \(Y = X_1B_1 + X_2B_2 + I + E\).
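To make the two models concrete, here is a minimal sketch in R. The health and happiness scores are simulated, so the numbers are hypothetical and are not taken from Kozma and Stones (1983); the variable names are likewise invented for the example. Note also that with a sample we can only estimate \(I\) by the sample mean, not the population mean.

```r
set.seed(1)  # make the simulated numbers reproducible

# Hypothetical scores for 50 people (simulated, not real data)
health    <- rnorm(50, mean = 50, sd = 10)
happiness <- 20 + 0.5 * health + rnorm(50, mean = 0, sd = 5)

# Model with no predictors: Y = I + E
m0 <- lm(happiness ~ 1)
coef(m0)          # the estimated intercept I ...
mean(happiness)   # ... is simply the sample mean of happiness

# Model with one predictor: Y = XB + I + E
m1 <- lm(happiness ~ health)
coef(m1)          # estimated intercept I and slope B

# Sum of squared errors (the E term) under each model
sse0 <- sum(resid(m0)^2)
sse1 <- sum(resid(m1)^2)
c(no_predictor = sse0, with_health = sse1)
```

The second model can never have a larger sum of squared errors than the first, since the intercept-only model is a special case of it (the case where \(B = 0\)). How much smaller it is tells us how useful the predictor is.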

8.4 Correlations

Up to this point we have focused entirely on how to construct descriptive statistics for a single variable. What we haven’t done is talked about how to describe the relationships between variables in the data. To do that, we want to talk mostly about the correlation between variables. But first, we need some data.

8.4.1 The data

After spending so much time looking at the AFL data, I’m starting to get bored with sports. Instead, let’s turn to a topic close to every parent’s heart: sleep. The following data set is fictitious, but based on real events. Suppose I’m curious to find out how much my infant son’s sleeping habits affect my mood. Let’s say that I can rate my grumpiness very precisely, on a scale from 0 (not at all grumpy) to 100 (grumpy as a very, very grumpy old man). And, lets also assume that I’ve been measuring my grumpiness, my sleeping patterns and my son’s sleeping patterns for quite some time now. Let’s say, for 100 days. And, being a nerd, I’ve saved the data as a file called parenthood.Rdata. If we load the data…

load( "./data/parenthood.Rdata" )

who(TRUE)

## -- Name -- -- Class -- -- Size --

## parenthood data.frame 100 x 4

## $dan.sleep numeric 100

## $baby.sleep numeric 100

## $dan.grump numeric 100

## $day integer 100

… we see that the file contains a single data frame called parenthood, which contains four variables: dan.sleep, baby.sleep, dan.grump and day. If we peek at the data using head(), here’s what we get:

head(parenthood,10)

## dan.sleep baby.sleep dan.grump day

## 1 7.59 10.18 56 1

## 2 7.91 11.66 60 2

## 3 5.14 7.92 82 3

## 4 7.71 9.61 55 4

## 5 6.68 9.75 67 5

## 6 5.99 5.04 72 6

## 7 8.19 10.45 53 7

## 8 7.19 8.27 60 8

## 9 7.40 6.06 60 9

## 10 6.58 7.09 71 10

Next, I’ll calculate some basic descriptive statistics:

describe( parenthood )

## vars n mean sd median trimmed mad min max range

## dan.sleep 1 100 6.97 1.02 7.03 7.00 1.09 4.84 9.00 4.16

## baby.sleep 2 100 8.05 2.07 7.95 8.05 2.33 3.25 12.07 8.82

## dan.grump 3 100 63.71 10.05 62.00 63.16 9.64 41.00 91.00 50.00

## day 4 100 50.50 29.01 50.50 50.50 37.06 1.00 100.00 99.00

## skew kurtosis se

## dan.sleep -0.29 -0.72 0.10

## baby.sleep -0.02 -0.69 0.21

## dan.grump 0.43 -0.16 1.00

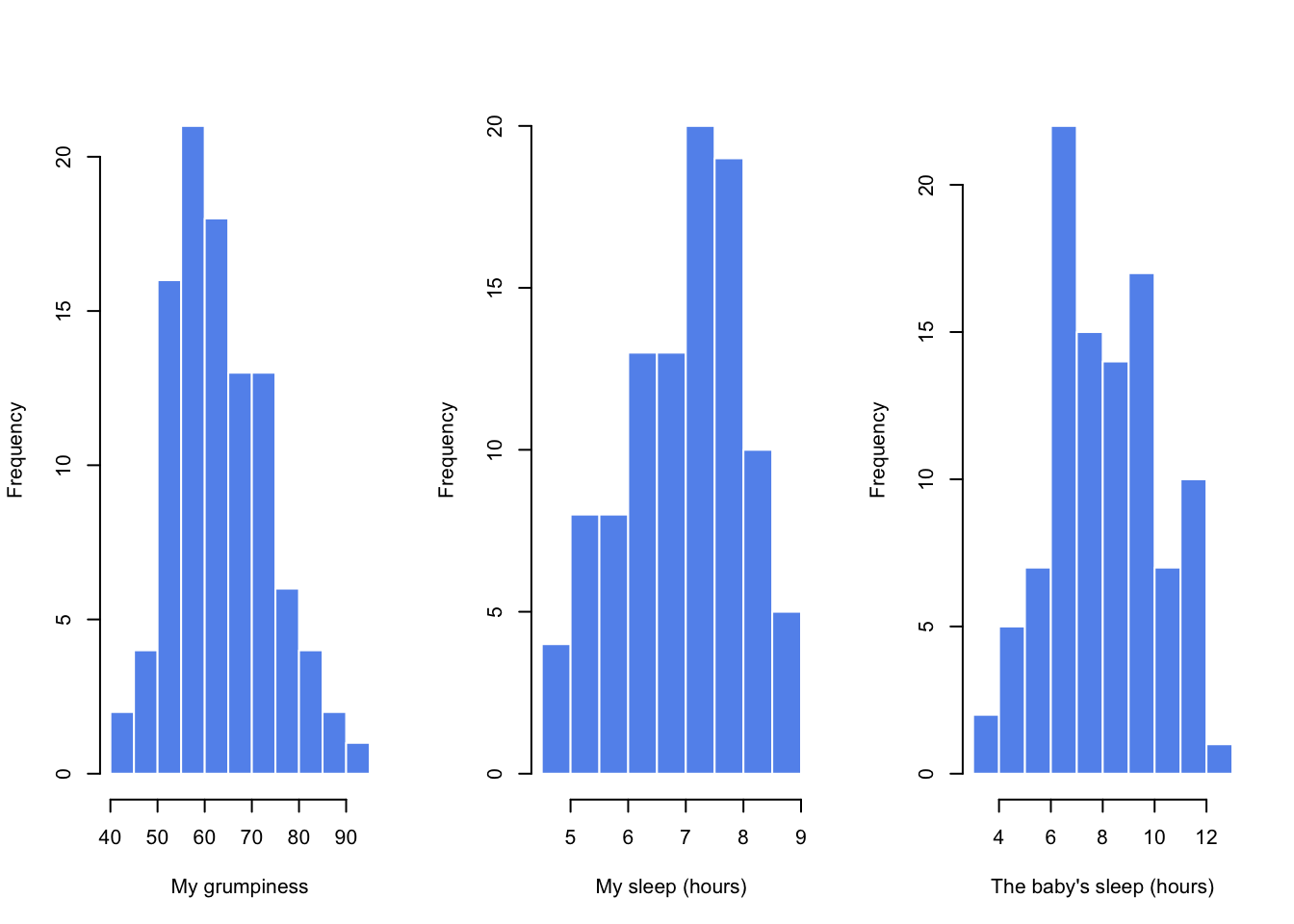

## day 0.00 -1.24 2.90Finally, to give a graphical depiction of what each of the three interesting variables looks like, Figure 8.1 plots histograms.

Figure 8.1: Histograms for the three interesting variables in the parenthood data set

One thing to note: just because R can calculate dozens of different statistics doesn’t mean you should report all of them. If I were writing this up for a report, I’d probably pick out those statistics that are of most interest to me (and to my readership), and then put them into a nice, simple table like the one in Table 8.1. Notice that when I put it into a table, I gave everything “human readable” names. This is always good practice. Notice also that I’m not getting enough sleep. This isn’t good practice, but other parents tell me that it’s standard practice.

| variable | min | max | mean | median | std. dev | IQR |
|---|---|---|---|---|---|---|
| Dan’s grumpiness | 41 | 91 | 63.71 | 62 | 10.05 | 14 |
| Dan’s hours slept | 4.84 | 9 | 6.97 | 7.03 | 1.02 | 1.45 |
| Dan’s son’s hours slept | 3.25 | 12.07 | 8.05 | 7.95 | 2.07 | 3.21 |

8.4.2 The strength and direction of a relationship

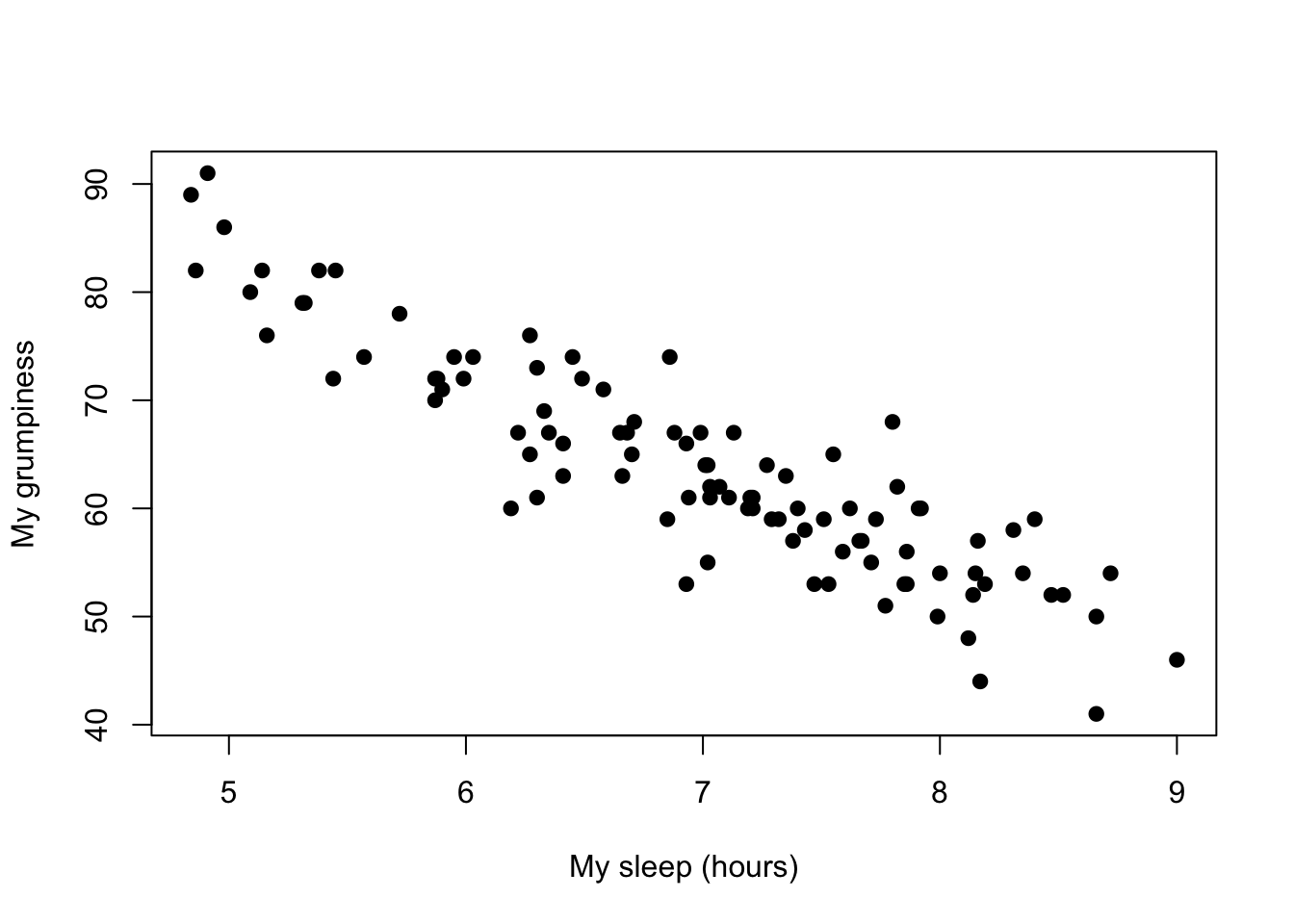

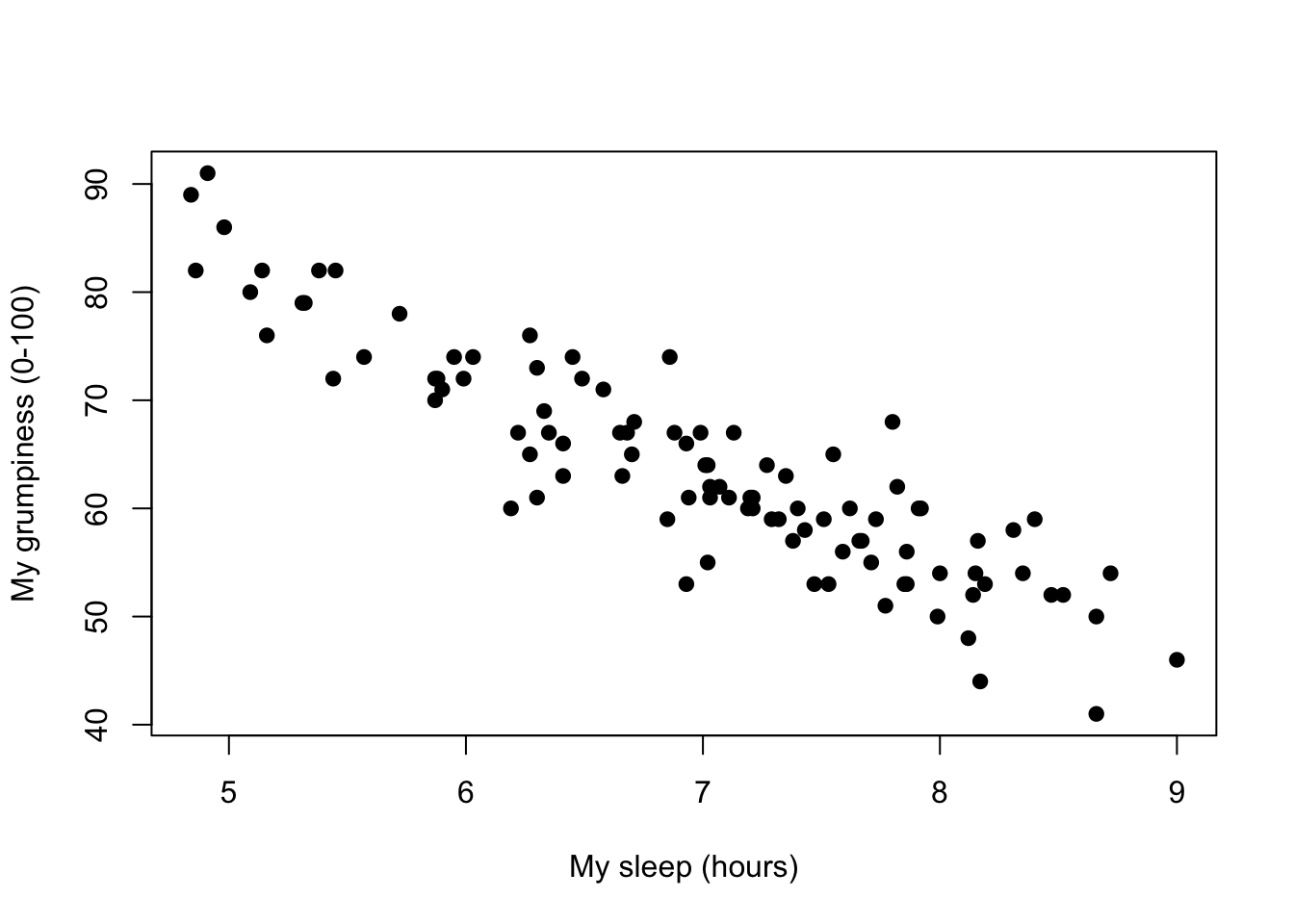

Figure 8.2: Scatterplot showing the relationship between dan.sleep and dan.grump

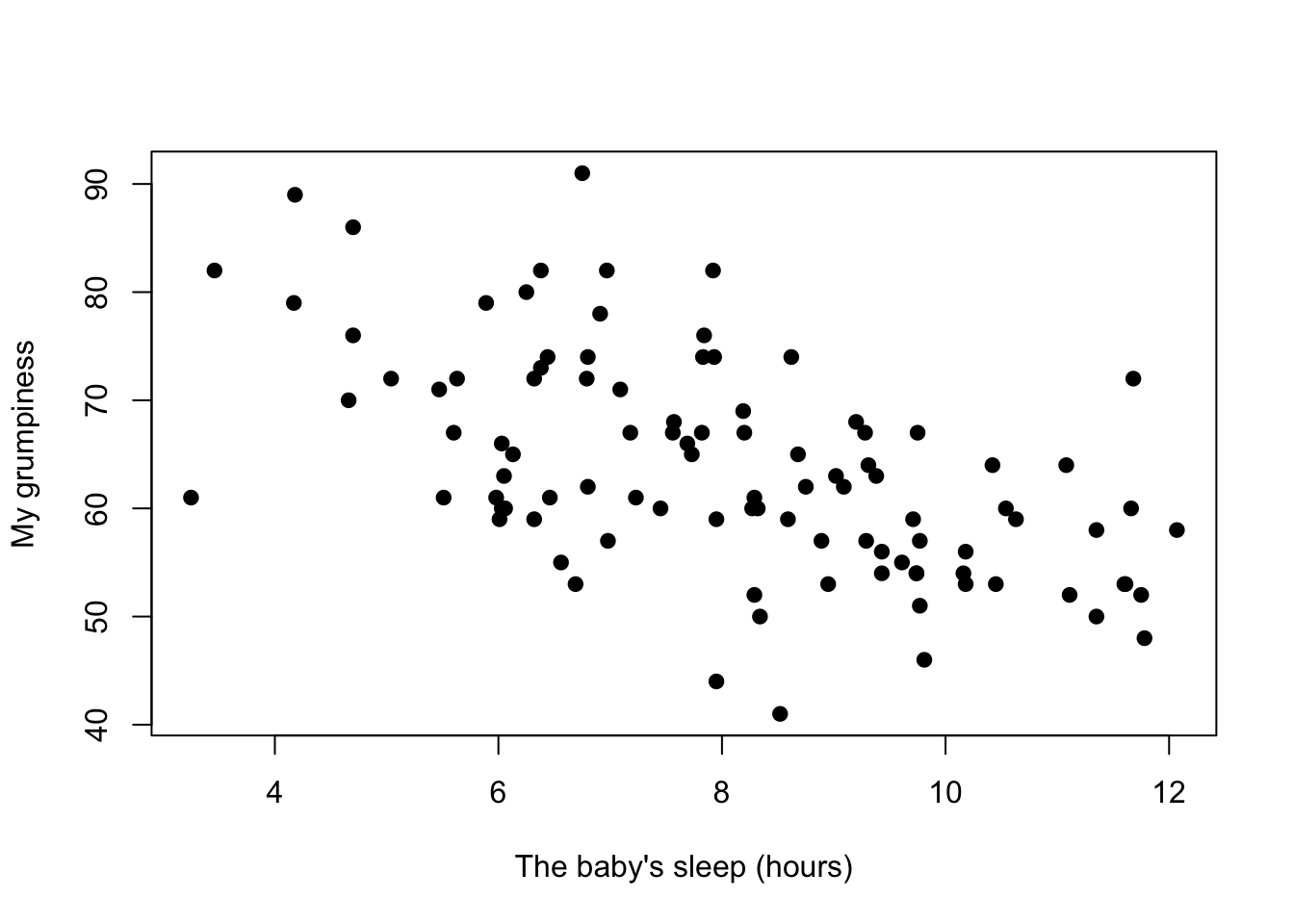

Figure 8.3: Scatterplot showing the relationship between baby.sleep and dan.grump

We can draw scatterplots to give us a general sense of how closely related two variables are. Ideally though, we might want to say a bit more about it than that. For instance, let’s compare the relationship between dan.sleep and dan.grump (Figure 8.2) with that between baby.sleep and dan.grump (Figure 8.3). When looking at these two plots side by side, it’s clear that the relationship is qualitatively the same in both cases: more sleep equals less grump! However, it’s also pretty obvious that the relationship between dan.sleep and dan.grump is stronger than the relationship between baby.sleep and dan.grump. The plot on the left is “neater” than the one on the right. What it feels like is that if you want to predict what my mood is, it’d help you a little bit to know how many hours my son slept, but it’d be more helpful to know how many hours I slept.

In contrast, let’s consider Figure 8.3 vs. Figure 8.4. If we compare the scatterplot of “baby.sleep v dan.grump” to the scatterplot of “baby.sleep v dan.sleep,” the overall strength of the relationship is the same, but the direction is different. That is, if my son sleeps more, I get more sleep (positive relationship), but if he sleeps more then I get less grumpy (negative relationship).

Figure 8.4: Scatterplot showing the relationship between baby.sleep and dan.sleep

8.4.3 The correlation coefficient

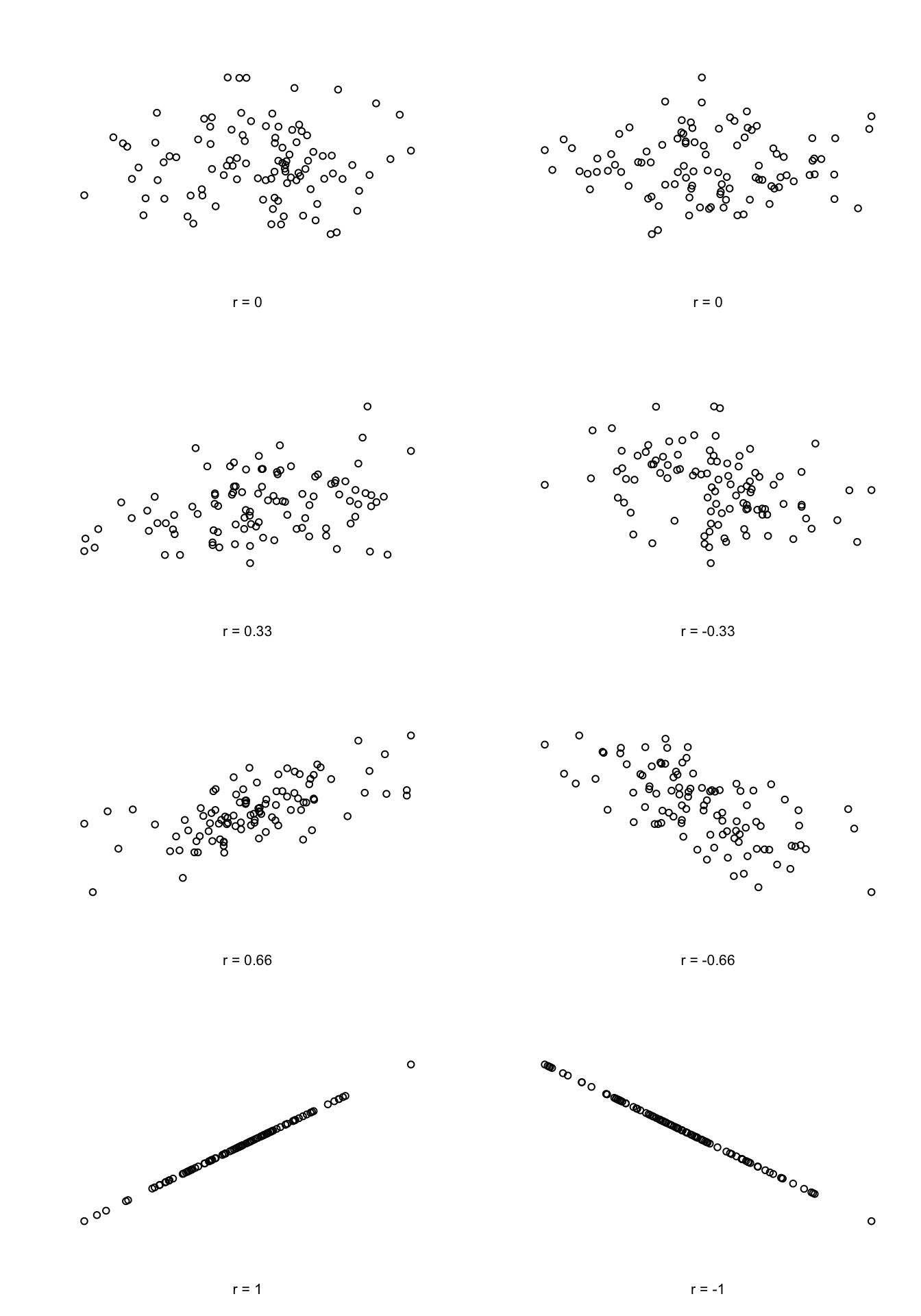

We can make these ideas a bit more explicit by introducing the idea of a correlation coefficient (or, more specifically, Pearson’s correlation coefficient), which is traditionally denoted by \(r\). The correlation coefficient between two variables \(X\) and \(Y\) (sometimes denoted \(r_{XY}\)), which we’ll define more precisely in the next section, is a measure that varies from \(-1\) to \(1\). When \(r = -1\) it means that we have a perfect negative relationship, and when \(r = 1\) it means we have a perfect positive relationship. When \(r = 0\), there’s no relationship at all. If you look at Figure 8.5, you can see several plots showing what different correlations look like.

Figure 8.5: Illustration of the effect of varying the strength and direction of a correlation

The formula for the Pearson’s correlation coefficient can be written in several different ways. I think the simplest way to write down the formula is to break it into two steps. Firstly, let’s introduce the idea of a covariance. The covariance between two variables \(X\) and \(Y\) is a generalisation of the notion of the variance; it’s a mathematically simple way of describing the relationship between two variables that isn’t terribly informative to humans:

\[ \mbox{Cov}(X,Y) = \frac{1}{N-1} \sum_{i=1}^N \left( X_i - \bar{X} \right) \left( Y_i - \bar{Y} \right) \]

Because we’re multiplying (i.e., taking the “product” of) a quantity that depends on \(X\) by a quantity that depends on \(Y\) and then averaging, you can think of the formula for the covariance as an “average cross product” between \(X\) and \(Y\). The covariance has the nice property that, if \(X\) and \(Y\) are entirely unrelated, then the covariance is exactly zero. If the relationship between them is positive (in the sense shown in Figure 8.5) then the covariance is also positive; and if the relationship is negative then the covariance is also negative. In other words, the covariance captures the basic qualitative idea of correlation. Unfortunately, the raw magnitude of the covariance isn’t easy to interpret: it depends on the units in which \(X\) and \(Y\) are expressed, and worse yet, the actual units that the covariance itself is expressed in are really weird. For instance, if \(X\) refers to the dan.sleep variable (units: hours) and \(Y\) refers to the dan.grump variable (units: grumps), then the units for their covariance are “hours \(\times\) grumps.” And I have no freaking idea what that would even mean.

The Pearson correlation coefficient \(r\) fixes this interpretation problem by standardising the covariance, in pretty much the exact same way that the \(z\)-score standardises a raw score: by dividing by the standard deviation. However, because we have two variables that contribute to the covariance, the standardisation only works if we divide by both standard deviations. In other words, the correlation between \(X\) and \(Y\) can be written as follows: \[ r_{XY} = \frac{\mbox{Cov}(X,Y)}{ \hat{\sigma}_X \ \hat{\sigma}_Y} \] By doing this standardisation, not only do we keep all of the nice properties of the covariance discussed earlier, but the actual values of \(r\) are on a meaningful scale: \(r = 1\) implies a perfect positive relationship, and \(r = -1\) implies a perfect negative relationship. I’ll expand a little more on this point later, in Section 8.4.5. But before I do, let’s look at how to calculate correlations in R.
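The two-step formula can be checked numerically. The sketch below types in only the first ten days of the parenthood data (the rows printed by head() earlier), so the resulting value of \(r\) will not match the value for the full 100 days. The names cov_by_hand and r_by_hand are just illustrative.

```r
# First ten days of the parenthood data, copied from the head() output
dan.sleep <- c(7.59, 7.91, 5.14, 7.71, 6.68, 5.99, 8.19, 7.19, 7.40, 6.58)
dan.grump <- c(56, 60, 82, 55, 67, 72, 53, 60, 60, 71)
N <- length(dan.sleep)

# Step 1: covariance as the "average cross product" (dividing by N - 1)
cov_by_hand <- sum((dan.sleep - mean(dan.sleep)) *
                   (dan.grump - mean(dan.grump))) / (N - 1)

# Step 2: standardise by both standard deviations to get r
r_by_hand <- cov_by_hand / (sd(dan.sleep) * sd(dan.grump))

# Compare with R's built-in functions
c(by_hand = cov_by_hand, built_in = cov(dan.sleep, dan.grump))
c(by_hand = r_by_hand,   built_in = cor(dan.sleep, dan.grump))
```

The hand calculation and the built-in functions should agree, and the sign of both the covariance and the correlation is negative: more sleep goes with less grumpiness.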

8.4.4 Calculating correlations in R

Calculating correlations in R can be done using the cor() command. The simplest way to use the command is to specify two input arguments x and y, each one corresponding to one of the variables. The following extract illustrates the basic usage of the function:

cor( x = parenthood$dan.sleep, y = parenthood$dan.grump )

## [1] -0.903384

However, the cor() function is a bit more powerful than this simple example suggests. For example, you can also calculate a complete “correlation matrix,” between all pairs of variables in the data frame:

# correlate all pairs of variables in "parenthood":

cor( x = parenthood )

## dan.sleep baby.sleep dan.grump day

## dan.sleep 1.00000000 0.62794934 -0.90338404 -0.09840768

## baby.sleep 0.62794934 1.00000000 -0.56596373 -0.01043394

## dan.grump -0.90338404 -0.56596373 1.00000000 0.07647926

## day -0.09840768 -0.01043394 0.07647926 1.00000000

8.4.5 Interpreting a correlation

Naturally, in real life you don’t see many correlations of 1. So how should you interpret a correlation of, say \(r= .4\)? The honest answer is that it really depends on what you want to use the data for, and on how strong the correlations in your field tend to be. A friend of mine in engineering once argued that any correlation less than \(.95\) is completely useless (I think he was exaggerating, even for engineering). On the other hand there are real cases – even in psychology – where you should really expect correlations that strong. For instance, one of the benchmark data sets used to test theories of how people judge similarities is so clean that any theory that can’t achieve a correlation of at least \(.9\) really isn’t deemed to be successful. However, when looking for (say) elementary correlates of intelligence (e.g., inspection time, response time), if you get a correlation above \(.3\) you’re doing very very well. In short, the interpretation of a correlation depends a lot on the context. That said, the rough guide in Table 8.2 is pretty typical.

| Correlation | Strength | Direction |
|---|---|---|
| -1.0 to -0.9 | Very strong | Negative |
| -0.9 to -0.7 | Strong | Negative |
| -0.7 to -0.4 | Moderate | Negative |
| -0.4 to -0.2 | Weak | Negative |
| -0.2 to 0 | Negligible | Negative |
| 0 to 0.2 | Negligible | Positive |
| 0.2 to 0.4 | Weak | Positive |
| 0.4 to 0.7 | Moderate | Positive |
| 0.7 to 0.9 | Strong | Positive |
| 0.9 to 1.0 | Very strong | Positive |
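If you find yourself looking up this table a lot, the lookup is easy to automate. The helper below is hypothetical (describe_correlation() is not part of R or of any package used in this chapter), and it has to make one assumption the table leaves open: a value that falls exactly on a boundary, such as 0.9, is assigned to the weaker of the two categories.

```r
# Hypothetical helper: label correlations using the rough guide in the table
describe_correlation <- function(r) {
  strength <- cut(abs(r),
                  breaks = c(0, 0.2, 0.4, 0.7, 0.9, 1),
                  labels = c("Negligible", "Weak", "Moderate",
                             "Strong", "Very strong"),
                  include.lowest = TRUE)  # so that r = 0 still gets a label
  direction <- ifelse(r < 0, "negative", "positive")
  paste(as.character(strength), direction)
}

# Three correlations from the parenthood correlation matrix (rounded)
labels <- describe_correlation(c(-0.903, 0.628, -0.566))
labels
```

Treat the labels as a rough guide only; as discussed above, what counts as a "strong" correlation depends heavily on the context.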

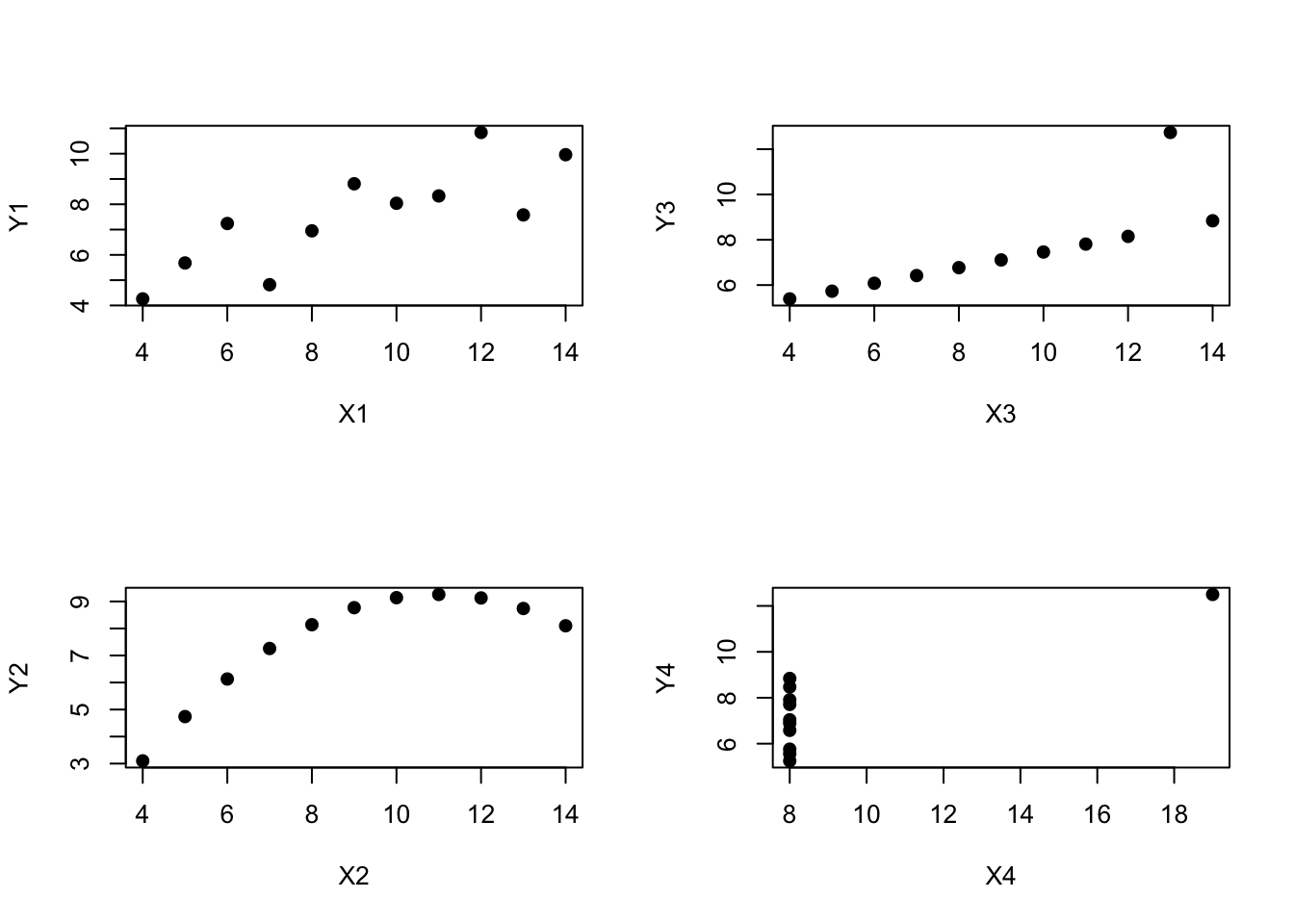

However, something that can never be stressed enough is that you should always look at the scatterplot before attaching any interpretation to the data. A correlation might not mean what you think it means. The classic illustration of this is “Anscombe’s Quartet” (Anscombe 1973), which is a collection of four data sets. Each data set has two variables, an \(X\) and a \(Y\). For all four data sets the mean value for \(X\) is 9 and the mean for \(Y\) is 7.5. The standard deviations for all \(X\) variables are almost identical, as are those for the \(Y\) variables. And in each case the correlation between \(X\) and \(Y\) is \(r = 0.816\). You can verify this yourself, since the dataset comes distributed with R. The commands would be:

cor( anscombe$x1, anscombe$y1 )

## [1] 0.8164205

cor( anscombe$x2, anscombe$y2 )

## [1] 0.8162365

and so on.
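Rather than typing one command per data set, the whole check can be done in a single pass. This is only a sketch: it relies on the anscombe data frame that ships with R (columns x1 to x4 and y1 to y4), and the object name quartet is made up for the example.

```r
# Summarise each of the four Anscombe data sets (anscombe ships with R)
quartet <- sapply(1:4, function(i) {
  x <- anscombe[[paste0("x", i)]]
  y <- anscombe[[paste0("y", i)]]
  c(mean_x = mean(x), mean_y = mean(y),
    sd_x = sd(x), sd_y = sd(y), r = cor(x, y))
})
colnames(quartet) <- paste("data set", 1:4)

# One column per data set, one row per summary statistic
round(quartet, 3)
```

Reading across any row, the four data sets look almost interchangeable, which is exactly why the summary statistics alone are not enough.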

You’d think that these four data sets would look pretty similar to one another. They do not. If we draw scatterplots of \(X\) against \(Y\) for all four data sets, as shown in Figure 8.6, we see that all four of these are spectacularly different to each other.

Figure 8.6: Anscombe’s quartet. All four of these data sets have a Pearson correlation of \(r = .816\), but they are qualitatively different from one another.

The lesson here, which so very many people seem to forget in real life, is “always graph your raw data.” This will be the focus of Chapter 3.9.

8.4.6 Spearman’s rank correlations

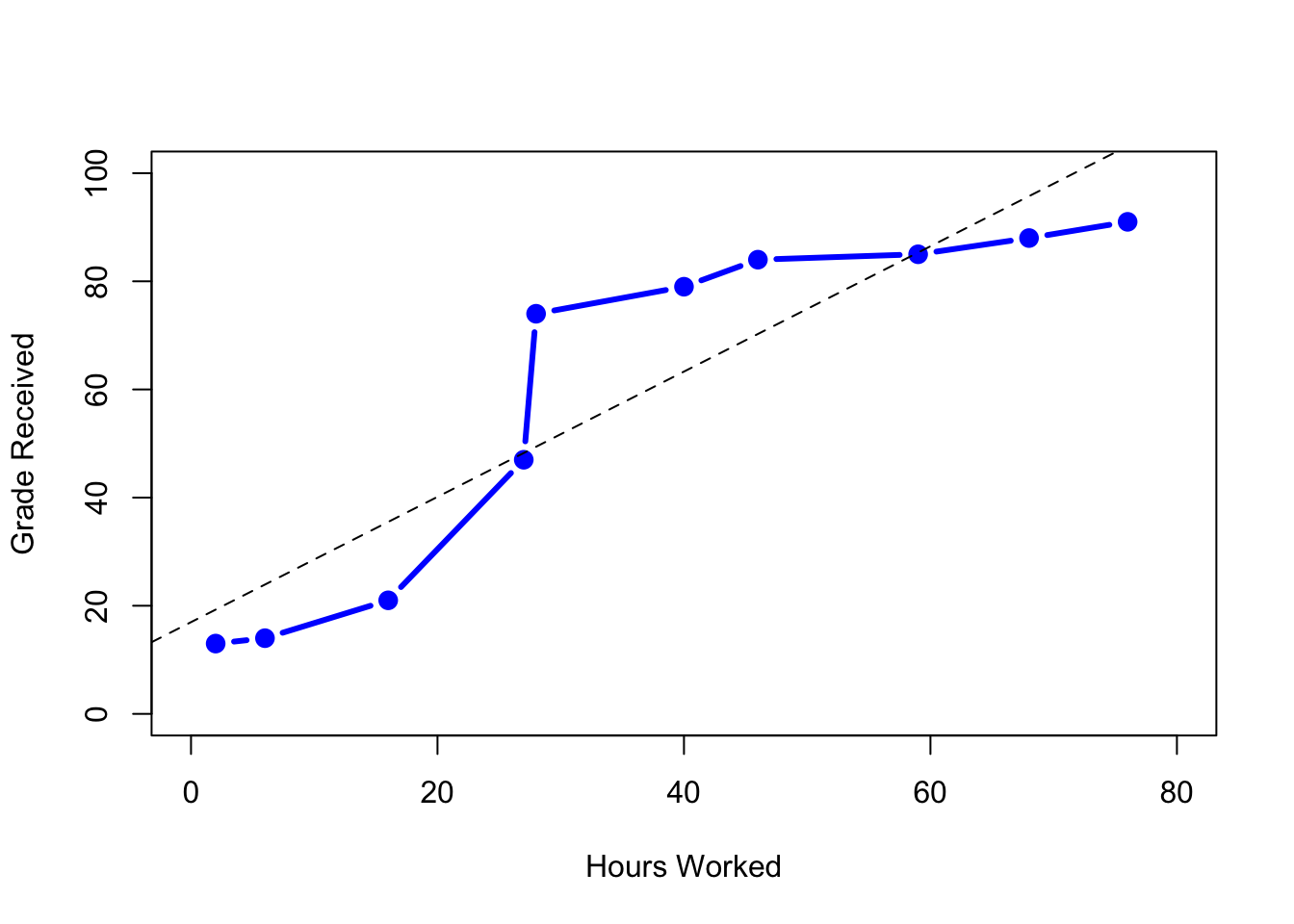

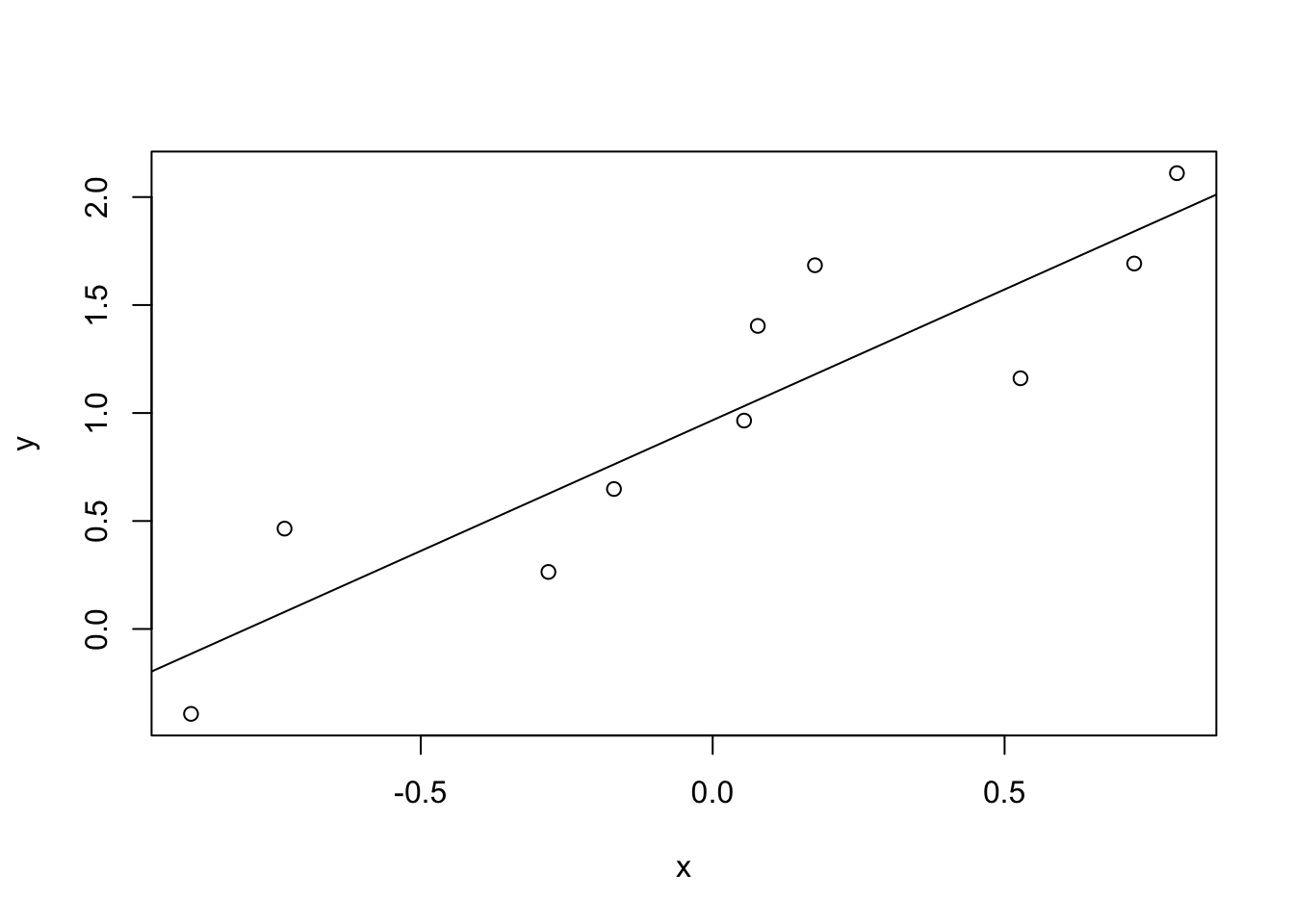

Figure 8.7: The relationship between hours worked and grade received, for a toy data set consisting of only 10 students (each circle corresponds to one student). The dashed line through the middle shows the linear relationship between the two variables. This produces a strong Pearson correlation of \(r = .91\). However, the interesting thing to note here is that there’s actually a perfect monotonic relationship between the two variables: in this toy example at least, increasing the hours worked always increases the grade received, as illustrated by the solid line. This is reflected in a Spearman correlation of \(\rho = 1\). With such a small data set, however, it’s an open question as to which version better describes the actual relationship involved.

The Pearson correlation coefficient is useful for a lot of things, but it does have shortcomings. One issue in particular stands out: what it actually measures is the strength of the linear relationship between two variables. In other words, what it gives you is a measure of the extent to which the data all tend to fall on a single, perfectly straight line. Often, this is a pretty good approximation to what we mean when we say “relationship,” and so the Pearson correlation is a good thing to calculate. Sometimes, it isn’t.

One very common situation where the Pearson correlation isn’t quite the right thing to use arises when an increase in one variable \(X\) really is reflected in an increase in another variable \(Y\), but the nature of the relationship isn’t necessarily linear. An example of this might be the relationship between effort and reward when studying for an exam. If you put zero effort (\(X\)) into learning a subject, then you should expect a grade of 0% (\(Y\)). However, a little bit of effort will cause a massive improvement: just turning up to lectures means that you learn a fair bit, and if you just turn up to classes and scribble a few things down, your grade might rise to 35%, all without a lot of effort. However, you just don’t get the same effect at the other end of the scale. As everyone knows, it takes a lot more effort to get a grade of 90% than it takes to get a grade of 55%. What this means is that, if I’ve got data looking at study effort and grades, there’s a pretty good chance that Pearson correlations will be misleading.

To illustrate, consider the data plotted in Figure 8.7, showing the relationship between hours worked and grade received for 10 students taking some class. The curious thing about this – highly fictitious – data set is that increasing your effort always increases your grade. It might be by a lot or it might be by a little, but increasing effort will never decrease your grade. The data are stored in effort.Rdata:

> load( "effort.Rdata" )

> who(TRUE)

-- Name -- -- Class -- -- Size --

effort data.frame 10 x 2

$hours numeric 10

$grade numeric 10

The raw data look like this:

> effort

hours grade

1 2 13

2 76 91

3 40 79

4 6 14

5 16 21

6 28 74

7 27 47

8 59 85

9 46 84

10 68 88

If we run a standard Pearson correlation, it shows a strong relationship between hours worked and grade received,

> cor( effort$hours, effort$grade )

[1] 0.909402

but this doesn’t actually capture the observation that increasing hours worked always increases the grade. There’s a sense here in which we want to be able to say that the correlation is perfect but for a somewhat different notion of what a “relationship” is. What we’re looking for is something that captures the fact that there is a perfect ordinal relationship here. That is, if student 1 works more hours than student 2, then we can guarantee that student 1 will get the better grade. That’s not what a correlation of \(r = .91\) says at all.

How should we address this? Actually, it’s really easy: if we’re looking for ordinal relationships, all we have to do is treat the data as if it were ordinal scale! So, instead of measuring effort in terms of “hours worked,” let’s rank all 10 of our students in order of hours worked. That is, student 1 did the least work out of anyone (2 hours) so they get the lowest rank (rank = 1). Student 4 was the next laziest, putting in only 6 hours of work over the whole semester, so they get the next lowest rank (rank = 2). Notice that I’m using “rank = 1” to mean “low rank.” Sometimes in everyday language we talk about “rank = 1” to mean “top rank” rather than “bottom rank.” So be careful: you can rank “from smallest value to largest value” (i.e., small equals rank 1) or you can rank “from largest value to smallest value” (i.e., large equals rank 1). In this case, I’m ranking from smallest to largest, because that’s the default way that R does it. But in real life, it’s really easy to forget which way you set things up, so you have to put a bit of effort into remembering!

Okay, so let’s have a look at our students when we rank them from worst to best in terms of effort and reward:

| | rank (hours worked) | rank (grade received) |
|---|---|---|
| student 1 | 1 | 1 |
| student 2 | 10 | 10 |
| student 3 | 6 | 6 |
| student 4 | 2 | 2 |
| student 5 | 3 | 3 |
| student 6 | 5 | 5 |
| student 7 | 4 | 4 |
| student 8 | 8 | 8 |
| student 9 | 7 | 7 |
| student 10 | 9 | 9 |

Hm. These are identical. The student who put in the most effort got the best grade, the student with the least effort got the worst grade, etc. We can get R to construct these rankings using the rank() function, like this:

> hours.rank <- rank( effort$hours ) # rank students by hours worked

> grade.rank <- rank( effort$grade ) # rank students by grade received

As the table above shows, these two rankings are identical, so if we now correlate them we get a perfect relationship:

> cor( hours.rank, grade.rank )

[1] 1

What we’ve just re-invented is Spearman’s rank order correlation, usually denoted \(\rho\) to distinguish it from the Pearson correlation \(r\). We can calculate Spearman’s \(\rho\) using R in two different ways. Firstly we could do it the way I just showed, using the rank() function to construct the rankings, and then calculate the Pearson correlation on these ranks. However, that’s way too much effort to do every time. It’s much easier to just specify the method argument of the cor() function.

> cor( effort$hours, effort$grade, method = "spearman")

[1] 1

The default value of the method argument is "pearson", which is why we didn’t have to specify it earlier on when we were doing Pearson correlations.
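If you don’t have the effort.Rdata file to hand, the comparison can still be reproduced, because the data set is small enough to type in from the listing above. The following is a self-contained sketch of the two routes to Spearman’s \(\rho\); the object names rho_from_ranks, rho_direct and r_pearson are illustrative only.

```r
# The ten students from the effort data, typed in from the listing above
effort <- data.frame(
  hours = c(2, 76, 40, 6, 16, 28, 27, 59, 46, 68),
  grade = c(13, 91, 79, 14, 21, 74, 47, 85, 84, 88)
)

# Route 1: rank each variable, then take the Pearson correlation of the ranks
rho_from_ranks <- cor(rank(effort$hours), rank(effort$grade))

# Route 2: ask cor() for the Spearman correlation directly
rho_direct <- cor(effort$hours, effort$grade, method = "spearman")

# Pearson correlation on the raw values, for comparison
r_pearson <- cor(effort$hours, effort$grade)

c(pearson = r_pearson, spearman_ranks = rho_from_ranks, spearman = rho_direct)
```

The two Spearman routes agree with each other, while the Pearson value on the raw scores is lower because the relationship is monotonic but not linear.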

8.4.7 The correlate() function

As we’ve seen, the cor() function works pretty well, and handles many of the situations that you might be interested in. One thing that many beginners find frustrating, however, is the fact that it’s not built to handle non-numeric variables. From a statistical perspective, this is perfectly sensible: Pearson and Spearman correlations are only designed to work for numeric variables, so the cor() function spits out an error.

Here’s what I mean. Suppose you were keeping track of how many hours you worked in any given day, and counted how many tasks you completed. If you were doing the tasks for money, you might also want to keep track of how much pay you got for each job. It would also be sensible to keep track of the weekday on which you actually did the work: most of us don’t work as much on Saturdays or Sundays. If you did this for 7 weeks, you might end up with a data set that looks like this one:

> load("work.Rdata")

> who(TRUE)

-- Name -- -- Class -- -- Size --

work data.frame 49 x 7

$hours numeric 49

$tasks numeric 49

$pay numeric 49

$day integer 49

$weekday factor 49

$week numeric 49

$day.type factor 49

> head(work)

hours tasks pay day weekday week day.type

1 7.2 14 41 1 Tuesday 1 weekday

2 7.4 11 39 2 Wednesday 1 weekday

3 6.6 14 13 3 Thursday 1 weekday

4 6.5 22 47 4 Friday 1 weekday

5 3.1 5 4 5 Saturday 1 weekend

6 3.0 7 12 6 Sunday 1 weekend

Obviously, I’d like to know something about how all these variables correlate with one another. I could correlate hours with pay quite easily using cor(), like so:

> cor(work$hours,work$pay)

[1] 0.7604283

But what if I wanted a quick and easy way to calculate all pairwise correlations between the numeric variables? I can’t just input the work data frame, because it contains two factor variables, weekday and day.type. If I try this, I get an error:

> cor(work)

Error in cor(work) : 'x' must be numeric

In order to get the correlations that I want using the cor() function, I need to create a new data frame that doesn’t contain the factor variables, and then feed that new data frame into the cor() function. It’s not actually very hard to do that, and I’ll talk about how to do it properly in Section ??. But it would be nice to have some function that is smart enough to just ignore the factor variables. That’s where the correlate() function in the lsr package can be handy. If you feed it a data frame that contains factors, it knows to ignore them, and returns the pairwise correlations only between the numeric variables:

> correlate(work)

CORRELATIONS

============

- correlation type: pearson

- correlations shown only when both variables are numeric

hours tasks pay day weekday week day.type

hours . 0.800 0.760 -0.049 . 0.018 .

tasks 0.800 . 0.720 -0.072 . -0.013 .

pay 0.760 0.720 . 0.137 . 0.196 .

day -0.049 -0.072 0.137 . . 0.990 .

weekday . . . . . . .

week 0.018 -0.013 0.196 0.990 . . .

day.type . . . . . . .

The output here shows a . whenever one of the variables is non-numeric. It also shows a . whenever a variable is correlated with itself (it’s not a meaningful thing to do). The correlate() function can also do Spearman correlations, by specifying the corr.method to use:

> correlate( work, corr.method="spearman" )

CORRELATIONS

============

- correlation type: spearman

- correlations shown only when both variables are numeric

hours tasks pay day weekday week day.type

hours . 0.805 0.745 -0.047 . 0.010 .

tasks 0.805 . 0.730 -0.068 . -0.008 .

pay 0.745 0.730 . 0.094 . 0.154 .

day -0.047 -0.068 0.094 . . 0.990 .

weekday . . . . . . .

week 0.010 -0.008 0.154 0.990 . . .

day.type . . . . . . .

Obviously, there’s no new functionality in the correlate() function, and any advanced R user would be perfectly capable of using the cor() function to get these numbers out. But if you’re not yet comfortable with extracting a subset of a data frame, the correlate() function is for you.
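For anyone curious about what the “do it yourself with cor()” route looks like, here is a minimal sketch in base R. The data frame toy is something I have typed in by hand for illustration (its hours and tasks values are copied from the six rows of work shown earlier, with abbreviated weekday labels), and the names toy, is.num, toy.numeric and cor.matrix are my own, not anything from the lsr package.

```r
# A small hand-typed data frame mixing numeric and factor columns
toy <- data.frame(
  hours   = c(7.2, 7.4, 6.6, 6.5, 3.1, 3.0),
  tasks   = c(14, 11, 14, 22, 5, 7),
  weekday = factor(c("Tue", "Wed", "Thu", "Fri", "Sat", "Sun"))
)

# Flag which columns are numeric, then keep only those columns
is.num      <- sapply(toy, is.numeric)
toy.numeric <- toy[, is.num, drop = FALSE]

# cor() no longer complains, because every remaining column is numeric
cor.matrix <- cor(toy.numeric)
print(cor.matrix)
```

The drop = FALSE argument is there so that the result stays a data frame even if only one numeric column survives the subsetting.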

8.4.8 Missing values in pairwise calculations

I mentioned earlier that the cor() function is a special case. It doesn’t have an na.rm argument, because the story becomes a lot more complicated when more than one variable is involved. What it does have is an argument called use which does roughly the same thing, but you need to think a little more carefully about what you want this time. To illustrate the issues, let’s open up a data set that has missing values, parenthood2.Rdata. This file contains the same data as the original parenthood data, but with some values deleted. It contains a single data frame, parenthood2:

> load( "parenthood2.Rdata" )

> print( parenthood2 )

dan.sleep baby.sleep dan.grump day

1 7.59 NA 56 1

2 7.91 11.66 60 2

3 5.14 7.92 82 3

4 7.71 9.61 55 4

5 6.68 9.75 NA 5

6 5.99 5.04 72 6

BLAH BLAH BLAH

If I calculate my descriptive statistics using the describe() function

> describe( parenthood2 )

var n mean sd median trimmed mad min max BLAH

dan.sleep 1 91 6.98 1.02 7.03 7.02 1.13 4.84 9.00 BLAH

baby.sleep 2 89 8.11 2.05 8.20 8.13 2.28 3.25 12.07 BLAH

dan.grump 3 92 63.15 9.85 61.00 62.66 10.38 41.00 89.00 BLAH

day 4 100 50.50 29.01 50.50 50.50 37.06 1.00 100.00 BLAH

we can see from the n column that there are 9 missing values for dan.sleep, 11 missing values for baby.sleep and 8 missing values for dan.grump. Suppose what I would like is a correlation matrix. And let’s also suppose that I don’t bother to tell R how to handle those missing values. Here’s what happens:

> cor( parenthood2 )

dan.sleep baby.sleep dan.grump day

dan.sleep 1 NA NA NA

baby.sleep NA 1 NA NA

dan.grump NA NA 1 NA

day NA NA NA 1

Annoying, but it kind of makes sense. If I don’t know what some of the values of dan.sleep and baby.sleep actually are, then I can’t possibly know what the correlation between these two variables is either, since the formula for the correlation coefficient makes use of every single observation in the data set. Once again, it makes sense: it’s just not particularly helpful.

To make R behave more sensibly in this situation, you need to specify the use argument to the cor() function. There are several different values that you can specify for this, but the two that we care most about in practice tend to be "complete.obs" and "pairwise.complete.obs". If we specify use = "complete.obs", R will completely ignore all cases (i.e., all rows in our parenthood2 data frame) that have any missing values at all. So, for instance, if you look back at the extract that I printed earlier, notice that observation 1 (i.e., day 1) of the parenthood2 data set is missing the value for baby.sleep, but is otherwise complete? Well, if you choose use = "complete.obs" R will ignore that row completely: that is, even when it’s trying to calculate the correlation between dan.sleep and dan.grump, observation 1 will be ignored, because the value of baby.sleep is missing for that observation. Here’s what we get:

> cor(parenthood2, use = "complete.obs")

dan.sleep baby.sleep dan.grump day

dan.sleep 1.00000000 0.6394985 -0.89951468 0.06132891

baby.sleep 0.63949845 1.0000000 -0.58656066 0.14555814

dan.grump -0.89951468 -0.5865607 1.00000000 -0.06816586

day 0.06132891 0.1455581 -0.06816586 1.00000000

The other possibility that we care about, and the one that tends to get used more often in practice, is to set use = "pairwise.complete.obs". When we do that, R only looks at the variables that it’s trying to correlate when determining what to drop. So, for instance, since the only missing value for observation 1 of parenthood2 is for baby.sleep, R will only drop observation 1 when baby.sleep is one of the variables involved: and so R keeps observation 1 when trying to correlate dan.sleep and dan.grump. When we do it this way, here’s what we get:

> cor(parenthood2, use = "pairwise.complete.obs")

dan.sleep baby.sleep dan.grump day

dan.sleep 1.00000000 0.61472303 -0.903442442 -0.076796665

baby.sleep 0.61472303 1.00000000 -0.567802669 0.058309485

dan.grump -0.90344244 -0.56780267 1.000000000 0.005833399

day -0.07679667 0.05830949 0.005833399 1.000000000

Similar, but not quite the same. It’s also worth noting that the correlate() function (in the lsr package) automatically uses the “pairwise complete” method:

> correlate(parenthood2)

CORRELATIONS

============

- correlation type: pearson

- correlations shown only when both variables are numeric

dan.sleep baby.sleep dan.grump day

dan.sleep . 0.615 -0.903 -0.077

baby.sleep 0.615 . -0.568 0.058

dan.grump -0.903 -0.568 . 0.006

day -0.077 0.058 0.006 .

The two approaches have different strengths and weaknesses. The “pairwise complete” approach has the advantage that it keeps more observations, so you’re making use of more of your data and (as we’ll discuss in tedious detail in Chapter 4.2) it improves the reliability of your estimated correlation. On the other hand, it means that every correlation in your correlation matrix is being computed from a slightly different set of observations, which can be awkward when you want to compare the different correlations that you’ve got.

So which method should you use? It depends a lot on why you think your values are missing, and probably depends a little on how paranoid you are. For instance, if you think that the missing values were “chosen” completely randomly, then you’ll probably want to use the pairwise method. If you think that missing data are a cue to thinking that the whole observation might be rubbish (e.g., someone just selecting arbitrary responses in your questionnaire), but that there’s no pattern to which observations are “rubbish” then it’s probably safer to keep only those observations that are complete. If you think there’s something systematic going on, in that some observations are more likely to be missing than others, then you have a much trickier problem to solve, and one that is beyond the scope of this book.
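To make the difference between the two settings concrete, here is a small sketch on made-up numbers. The data frame d and everything in it are invented purely for illustration (it has nothing to do with parenthood2); the only real R functions involved are cor() and complete.cases().

```r
# Made-up data: six observations, with one NA in y and one NA in z
d <- data.frame(
  x = c(1, 2, 3, 4, 5, 6),
  y = c(2, NA, 5, 4, 7, 8),
  z = c(9, 7, 8, NA, 3, 2)
)

# "complete.obs": only rows with no missing values anywhere are used
n.complete <- sum(complete.cases(d))             # rows 1, 3, 5 and 6 survive
r.complete <- cor(d, use = "complete.obs")

# "pairwise.complete.obs": each correlation uses every row that is
# complete for that particular pair of variables
n.xy <- sum(complete.cases(d[, c("x", "y")]))    # only row 2 is dropped
r.pairwise <- cor(d, use = "pairwise.complete.obs")

print(n.complete)
print(n.xy)
print(r.complete["x", "y"])
print(r.pairwise["x", "y"])
```

The x-y correlation is based on four rows under the first setting and five rows under the second, which is exactly the “slightly different set of observations” issue described above.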

8.5 Linear regression

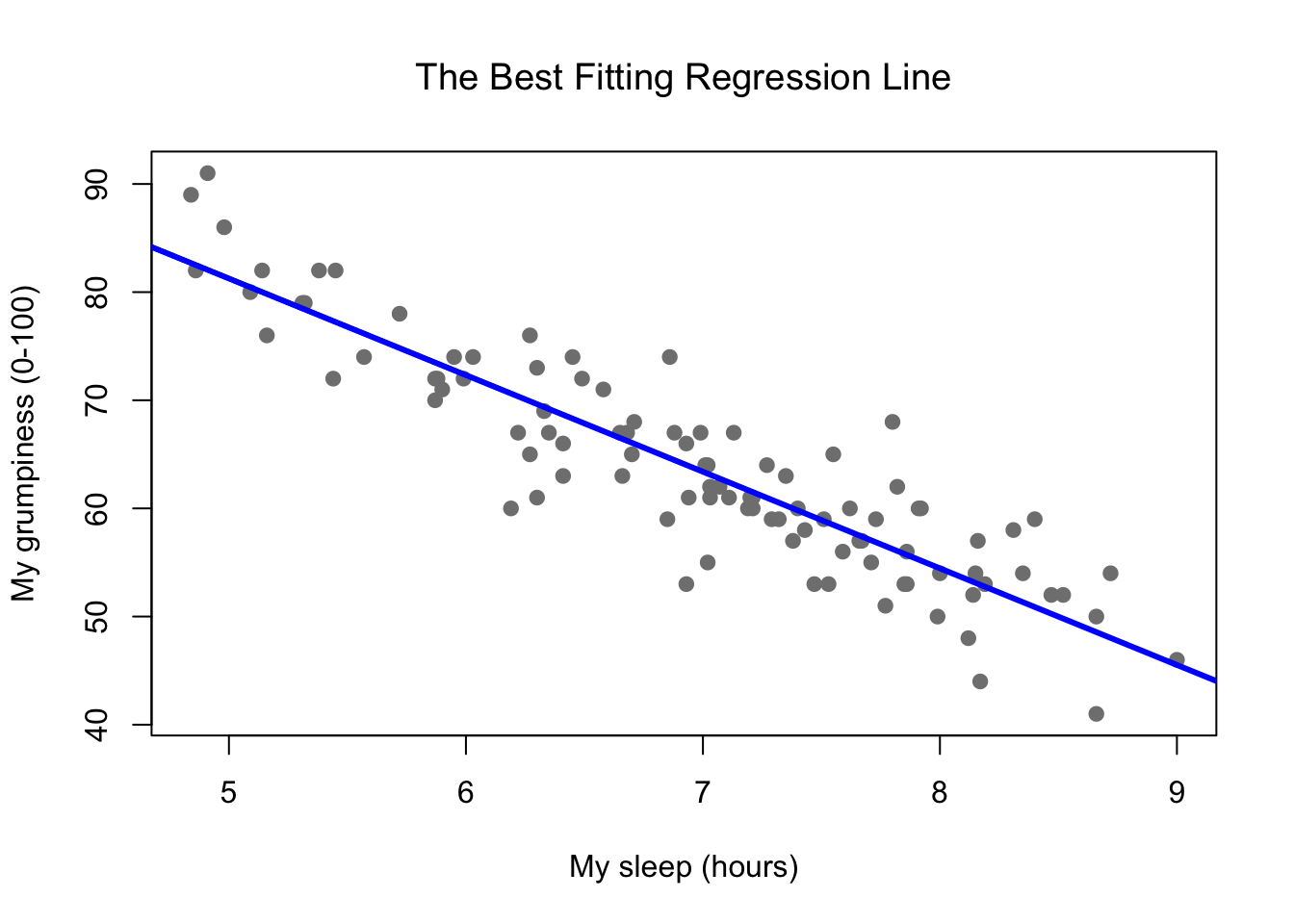

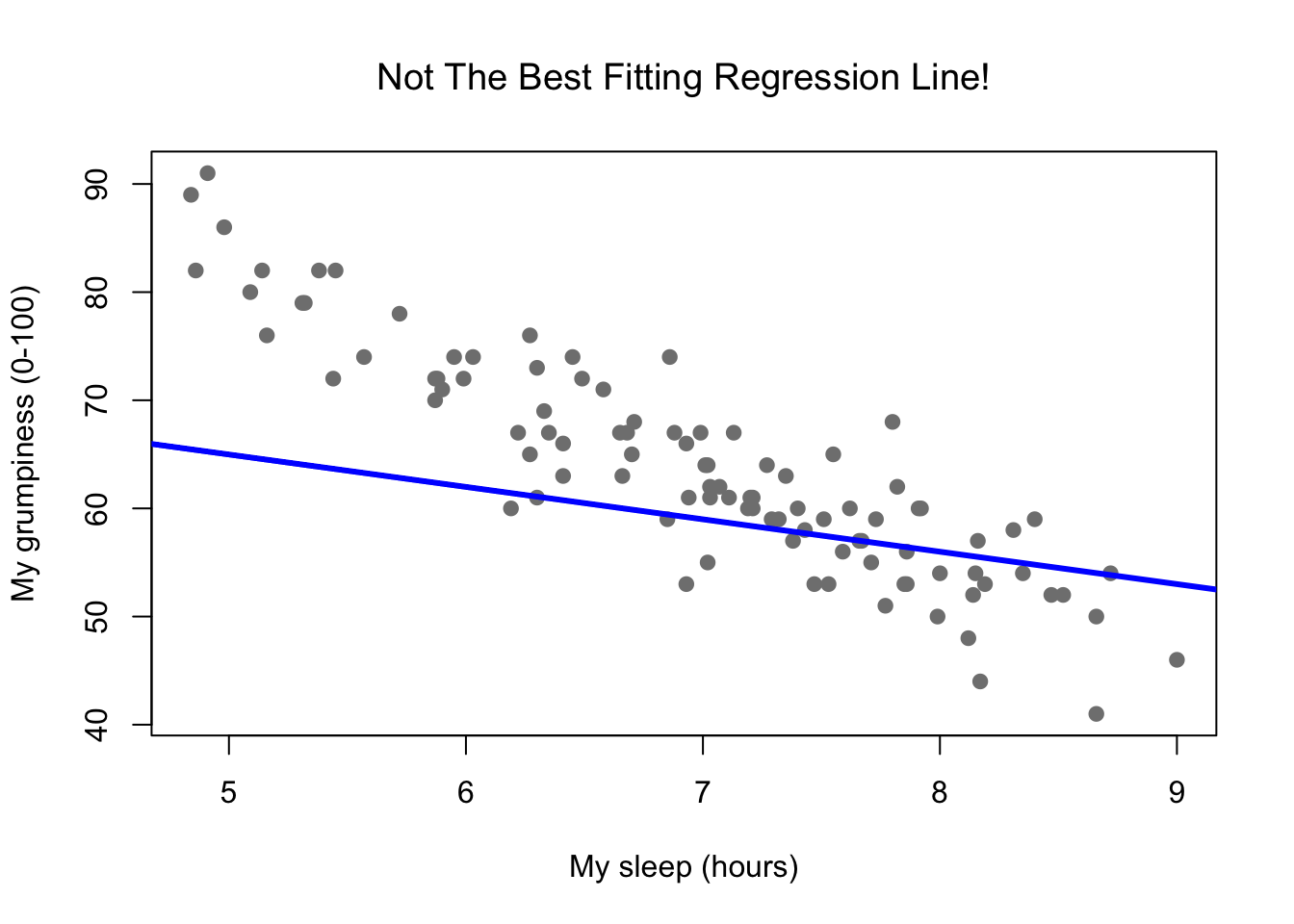

Figure 8.8: Scatterplot showing grumpiness as a function of hours slept.

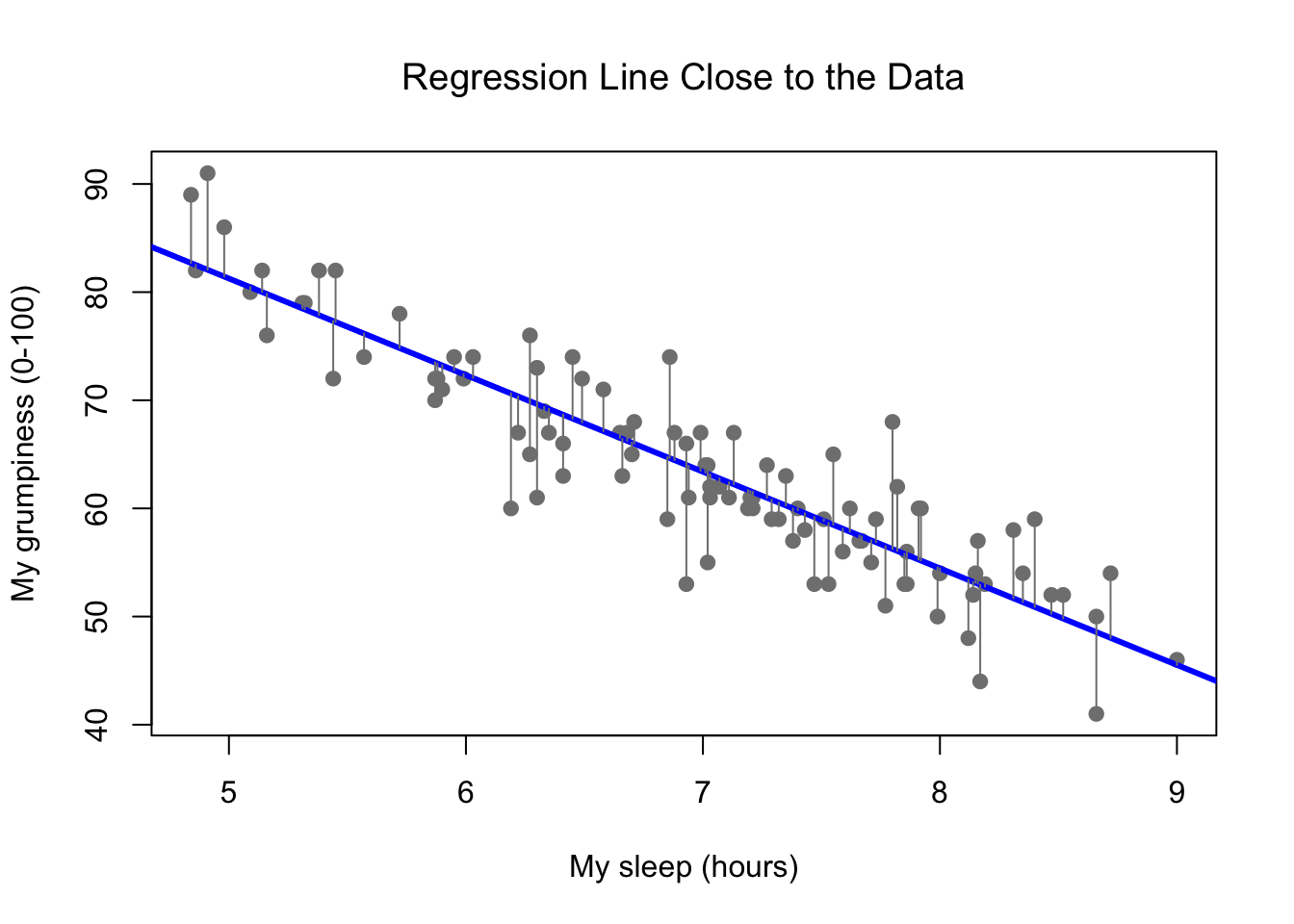

Figure 8.9: Panel a shows the sleep-grumpiness scatterplot from above with the best fitting regression line drawn over the top. Not surprisingly, the line goes through the middle of the data.

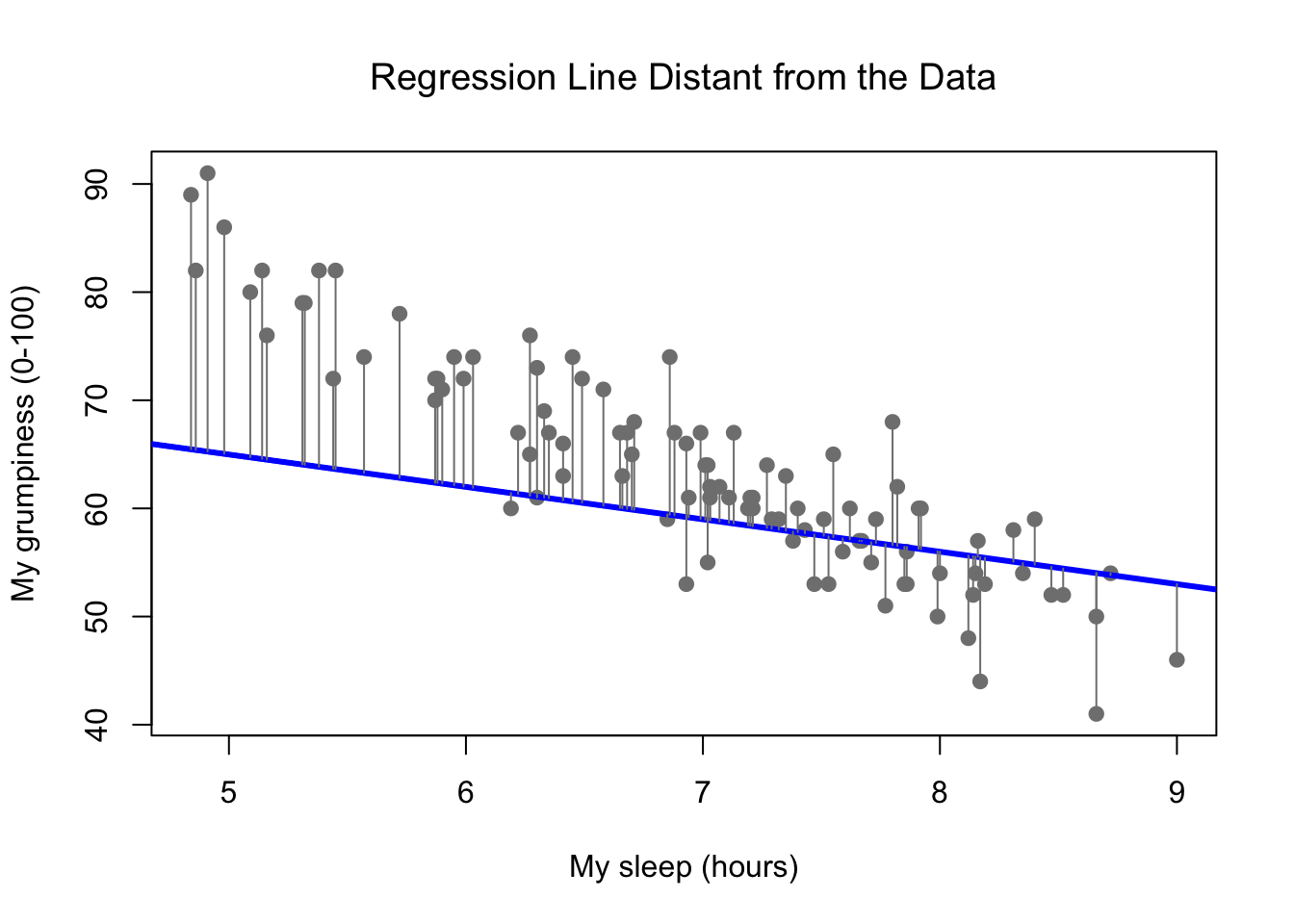

Figure 8.10: In contrast, this plot shows the same data, but with a very poor choice of regression line drawn over the top.

Since the basic ideas in regression are closely tied to correlation, we’ll return to the parenthood.Rdata file that we were using to illustrate how correlations work. Recall that, in this data set, we were trying to find out why Dan is so very grumpy all the time, and our working hypothesis was that I’m not getting enough sleep. We drew some scatterplots to help us examine the relationship between the amount of sleep I get, and my grumpiness the following day. The actual scatterplot that we draw is the one shown in Figure 8.8, and as we saw previously this corresponds to a correlation of \(r=-.90\), but what we find ourselves secretly imagining is something that looks closer to Figure 8.9. That is, we mentally draw a straight line through the middle of the data. In statistics, this line that we’re drawing is called a regression line. Notice that the regression line goes through the middle of the data. We don’t find ourselves imagining anything like the rather silly plot shown in Figure 8.10.

This is not highly surprising: the line that I’ve drawn in Figure 8.10 doesn’t “fit” the data very well, so it doesn’t make a lot of sense to propose it as a way of summarising the data, right? This is a very simple observation to make, but it turns out to be very powerful when we start trying to wrap just a little bit of maths around it. To do so, let’s start with a refresher of some high school maths. The formula for a straight line is usually written like this: \[ y = mx + c \] Or, at least, that’s what it was when I went to high school all those years ago. The two variables are \(x\) and \(y\), and we have two coefficients, \(m\) and \(c\). The coefficient \(m\) represents the slope of the line, and the coefficient \(c\) represents the \(y\)-intercept of the line. Digging further back into our decaying memories of high school (sorry, for some of us high school was a long time ago), we remember that the intercept is interpreted as “the value of \(y\) that you get when \(x=0\).” Similarly, a slope of \(m\) means that if you increase the \(x\)-value by 1 unit, then the \(y\)-value goes up by \(m\) units; a negative slope means that the \(y\)-value would go down rather than up. Ah yes, it’s all coming back to me now.

Now that we’ve remembered that, it should come as no surprise to discover that we use the exact same formula to describe a regression line. If \(Y\) is the outcome variable (the DV) and \(X\) is the predictor variable (the IV), then the formula that describes our regression is written like this: \[ \hat{Y}_i = b_1 X_i + b_0 \] Hm. Looks like the same formula, but there’s some extra frilly bits in this version. Let’s make sure we understand them. Firstly, notice that I’ve written \(X_i\) and \(Y_i\) rather than just plain old \(X\) and \(Y\). This is because we want to remember that we’re dealing with actual data. In this equation, \(X_i\) is the value of the predictor variable for the \(i\)th observation (i.e., the number of hours of sleep that I got on day \(i\) of my little study), and \(Y_i\) is the corresponding value of the outcome variable (i.e., my grumpiness on that day). And although I haven’t said so explicitly in the equation, what we’re assuming is that this formula works for all observations in the data set (i.e., for all \(i\)). Secondly, notice that I wrote \(\hat{Y}_i\) and not \(Y_i\). This is because we want to make the distinction between the actual data \(Y_i\), and the estimate \(\hat{Y}_i\) (i.e., the prediction that our regression line is making). Thirdly, I changed the letters used to describe the coefficients from \(m\) and \(c\) to \(b_1\) and \(b_0\). That’s just the way that statisticians like to refer to the coefficients in a regression model. I’ve no idea why they chose \(b\), but that’s what they did. In any case \(b_0\) always refers to the intercept term, and \(b_1\) refers to the slope.

Excellent, excellent. Next, I can’t help but notice that – regardless of whether we’re talking about the good regression line or the bad one – the data don’t fall perfectly on the line. Or, to say it another way, the data \(Y_i\) are not identical to the predictions of the regression model \(\hat{Y}_i\). Since statisticians love to attach letters, names and numbers to everything, let’s refer to the difference between the model prediction and the actual data point as a residual, and we’ll refer to it as \(\epsilon_i\). Written using mathematics, the residuals are defined as: \[ \epsilon_i = Y_i - \hat{Y}_i \] which in turn means that we can write down the complete linear regression model as: \[ Y_i = b_1 X_i + b_0 + \epsilon_i \]

8.6 Estimating a linear regression model

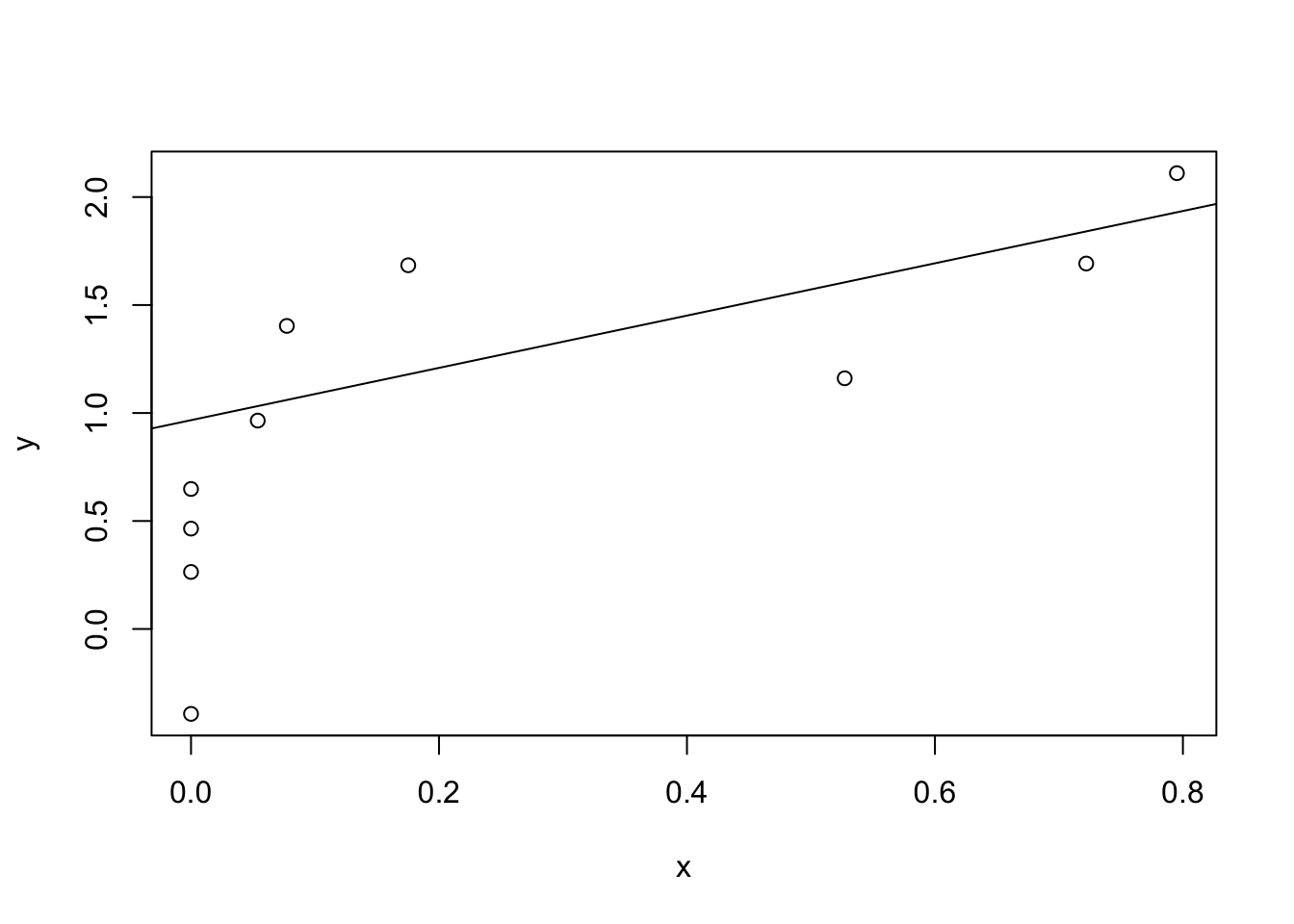

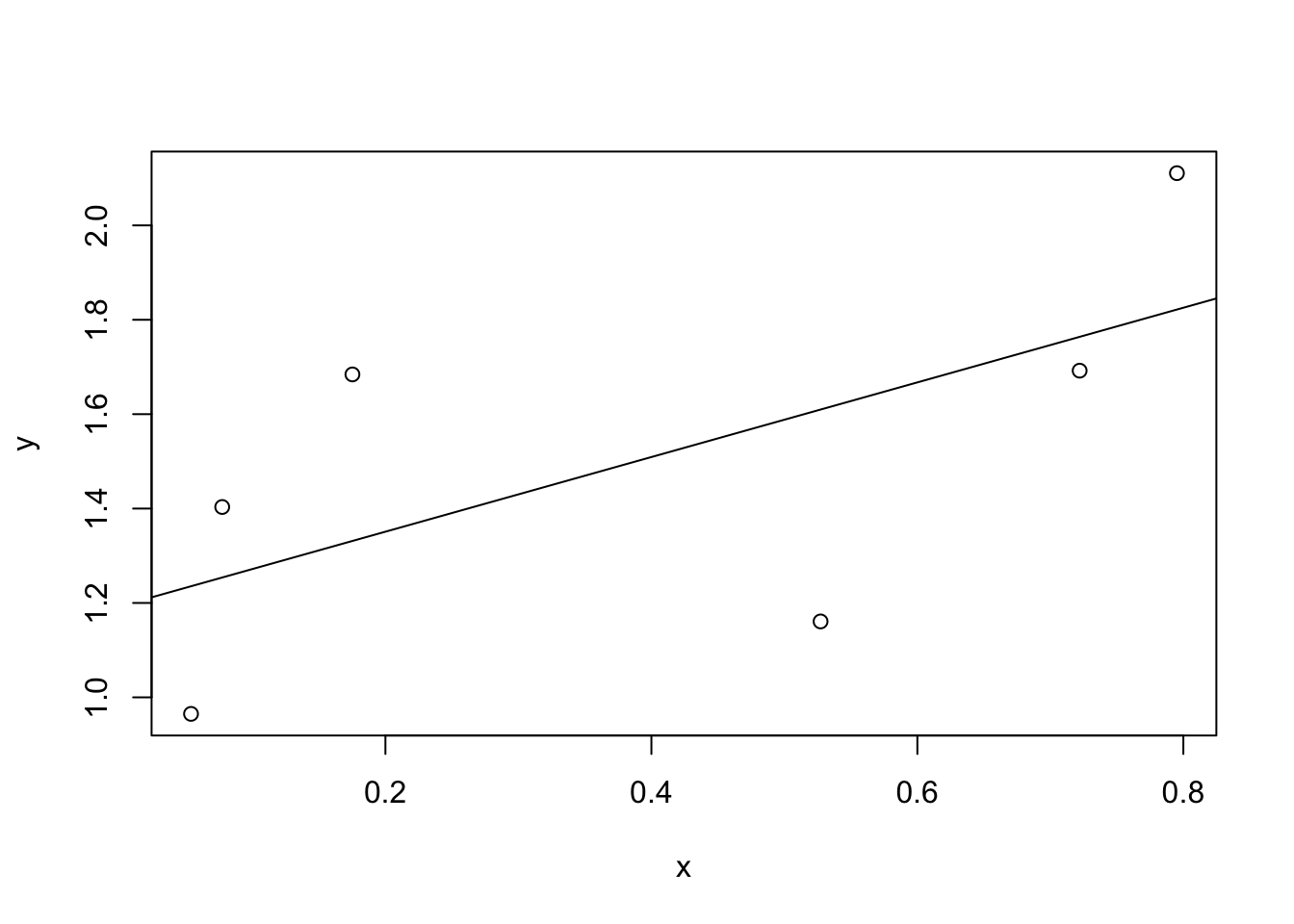

Figure 8.11: A depiction of the residuals associated with the best fitting regression line

Figure 8.12: The residuals associated with a poor regression line

Okay, now let’s redraw our pictures, but this time I’ll add some lines to show the size of the residual for all observations. When the regression line is good, our residuals (the lengths of the solid black lines) all look pretty small, as shown in Figure 8.11, but when the regression line is a bad one, the residuals are a lot larger, as you can see from looking at Figure 8.12. Hm. Maybe what we “want” in a regression model is small residuals. Yes, that does seem to make sense. In fact, I think I’ll go so far as to say that the “best fitting” regression line is the one that has the smallest residuals. Or, better yet, since statisticians seem to like to take squares of everything why not say that …

The estimated regression coefficients, \(\hat{b}_0\) and \(\hat{b}_1\) are those that minimise the sum of the squared residuals, which we could either write as \(\sum_i (Y_i - \hat{Y}_i)^2\) or as \(\sum_i {\epsilon_i}^2\).

Yes, yes that sounds even better. And since I’ve indented it like that, it probably means that this is the right answer. And since this is the right answer, it’s probably worth making a note of the fact that our regression coefficients are estimates (we’re trying to guess the parameters that describe a population!), which is why I’ve added the little hats, so that we get \(\hat{b}_0\) and \(\hat{b}_1\) rather than \(b_0\) and \(b_1\). Finally, I should also note that – since there’s actually more than one way to estimate a regression model – the more technical name for this estimation process is ordinary least squares (OLS) regression.

At this point, we now have a concrete definition for what counts as our “best” choice of regression coefficients, \(\hat{b}_0\) and \(\hat{b}_1\). The natural question to ask next is, if our optimal regression coefficients are those that minimise the sum of squared residuals, how do we find these wonderful numbers? The actual answer to this question is complicated, and it doesn’t help you understand the logic of regression. As a result, this time I’m going to let you off the hook. Instead of showing you how to do it the long and tedious way first, and then “revealing” the wonderful shortcut that R provides you with, let’s cut straight to the chase… and use the lm() function (short for “linear model”) to do all the heavy lifting.
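Before handing everything over to R, it can be reassuring to check the “smallest sum of squared residuals” idea numerically. The sketch below uses simulated data (not the parenthood file), and all of the names in it (sleep, grump, ssr, b0.hat, b1.hat) are ones I have made up for the occasion. For the special case of a single predictor there is a standard closed-form least squares solution, which is what the sketch uses; that formula is an addition of mine rather than something derived in this chapter.

```r
set.seed(1)                                    # make the simulated data reproducible
sleep <- runif(100, min = 5, max = 9)          # simulated hours of sleep
grump <- 125 - 9 * sleep + rnorm(100, sd = 4)  # simulated grumpiness scores

# Sum of squared residuals for the line with intercept b0 and slope b1
ssr <- function(b0, b1, X, Y) {
  Y.hat <- b1 * X + b0
  sum((Y - Y.hat)^2)
}

# Standard closed-form least squares solution for one predictor
b1.hat <- cov(sleep, grump) / var(sleep)
b0.hat <- mean(grump) - b1.hat * mean(sleep)

ssr.best <- ssr(b0.hat, b1.hat, sleep, grump)
ssr.bad  <- ssr(80, -3, sleep, grump)          # an arbitrary, badly chosen line

print(c(b0.hat, b1.hat))
print(ssr.best)
print(ssr.bad)
```

Whatever intercept and slope you try in place of 80 and -3, the sum of squared residuals should never come out smaller than ssr.best.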

8.6.1 Using the lm() function

The lm() function is a fairly complicated one: if you type ?lm, the help files will reveal that there are a lot of arguments that you can specify, and most of them won’t make a lot of sense to you. At this stage however, there’s really only two of them that you care about, and as it turns out you’ve seen them before:

- formula. A formula that specifies the regression model. For the simple linear regression models that we’ve talked about so far, in which you have a single predictor variable as well as an intercept term, this formula is of the form outcome ~ predictor. However, more complicated formulas are allowed, and we’ll discuss them later.
- data. The data frame containing the variables.

The output of the lm() function is a fairly complicated object, with quite a lot of technical information buried under the hood. Because this technical information is used by other functions, it’s generally a good idea to create a variable that stores the results of your regression. With this in mind, to run my linear regression, the command I want to use is this:

regression.1 <- lm( formula = dan.grump ~ dan.sleep, data = parenthood )

Note that I used dan.grump ~ dan.sleep as the formula: in the model that I’m trying to estimate, dan.grump is the outcome variable, and dan.sleep is the predictor variable. It’s always a good idea to remember which one is which! Anyway, what this does is create an “lm object” (i.e., a variable whose class is "lm") called regression.1. Let’s have a look at what happens when we print() it out:

print( regression.1 )

##

## Call:

## lm(formula = dan.grump ~ dan.sleep, data = parenthood)

##

## Coefficients:

## (Intercept) dan.sleep

## 125.956 -8.937

This looks promising. There’s two separate pieces of information here. Firstly, R is politely reminding us what the command was that we used to specify the model in the first place, which can be helpful. More importantly from our perspective, however, is the second part, in which R gives us the intercept \(\hat{b}_0 = 125.96\) and the slope \(\hat{b}_1 = -8.94\). In other words, the best-fitting regression line that I plotted in Figure 8.9 has this formula: \[ \hat{Y}_i = -8.94 \ X_i + 125.96 \]

8.6.2 Interpreting the estimated model

The most important thing to be able to understand is how to interpret these coefficients. Let’s start with \(\hat{b}_1\), the slope. If we remember the definition of the slope, a regression coefficient of \(\hat{b}_1 = -8.94\) means that if I increase \(X_i\) by 1, then I’m decreasing \(Y_i\) by 8.94. That is, each additional hour of sleep that I gain will improve my mood, reducing my grumpiness by 8.94 grumpiness points. What about the intercept? Well, since \(\hat{b}_0\) corresponds to “the expected value of \(Y_i\) when \(X_i\) equals 0,” it’s pretty straightforward. It implies that if I get zero hours of sleep (\(X_i =0\)) then my grumpiness will go off the scale, to an insane value of (\(Y_i = 125.96\)). Best to be avoided, I think.
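The interpretation of the slope and intercept can be checked directly in R. The following is only a sketch on simulated data: the data frame sim and its columns sleep and grump are invented stand-ins, so the numbers will not match the parenthood output. The functions coef() and predict() are standard tools for pulling coefficients and predictions out of an lm object.

```r
set.seed(2)                                        # reproducible simulated data
sim <- data.frame(sleep = runif(100, min = 5, max = 9))
sim$grump <- 125 - 9 * sim$sleep + rnorm(100, sd = 4)

fit <- lm(grump ~ sleep, data = sim)
b <- coef(fit)            # named vector with "(Intercept)" and "sleep"

# Predicted grumpiness after 6 hours and after 7 hours of sleep
new.days <- data.frame(sleep = c(6, 7))
pred <- predict(fit, newdata = new.days)

print(b)
print(pred)
print(pred[2] - pred[1])  # change in prediction for one extra hour of sleep
```

The last line prints the same value as the slope, which is just the “increase X by 1 and the prediction changes by the slope” statement in numerical form.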

8.7 Multiple linear regression

The simple linear regression model that we’ve discussed up to this point assumes that there’s a single predictor variable that you’re interested in, in this case dan.sleep. In fact, up to this point, every statistical tool that we’ve talked about has assumed that your analysis uses one predictor variable and one outcome variable. However, in many (perhaps most) research projects you actually have multiple predictors that you want to examine. If so, it would be nice to be able to extend the linear regression framework to be able to include multiple predictors. Perhaps some kind of multiple regression model would be in order?

Multiple regression is conceptually very simple. All we do is add more terms to our regression equation. Let’s suppose that we’ve got two variables that we’re interested in; perhaps we want to use both dan.sleep and baby.sleep to predict the dan.grump variable. As before, we let \(Y_i\) refer to my grumpiness on the \(i\)-th day. But now we have two \(X\) variables: the first corresponding to the amount of sleep I got and the second corresponding to the amount of sleep my son got. So we’ll let \(X_{i1}\) refer to the hours I slept on the \(i\)-th day, and \(X_{i2}\) refer to the hours that the baby slept on that day. If so, then we can write our regression model like this:

\[

Y_i = b_2 X_{i2} + b_1 X_{i1} + b_0 + \epsilon_i

\]

As before, \(\epsilon_i\) is the residual associated with the \(i\)-th observation, \(\epsilon_i = {Y}_i - \hat{Y}_i\). In this model, we now have three coefficients that need to be estimated: \(b_0\) is the intercept, \(b_1\) is the coefficient associated with my sleep, and \(b_2\) is the coefficient associated with my son’s sleep. However, although the number of coefficients that need to be estimated has changed, the basic idea of how the estimation works is unchanged: our estimated coefficients \(\hat{b}_0\), \(\hat{b}_1\) and \(\hat{b}_2\) are those that minimise the sum of squared residuals.

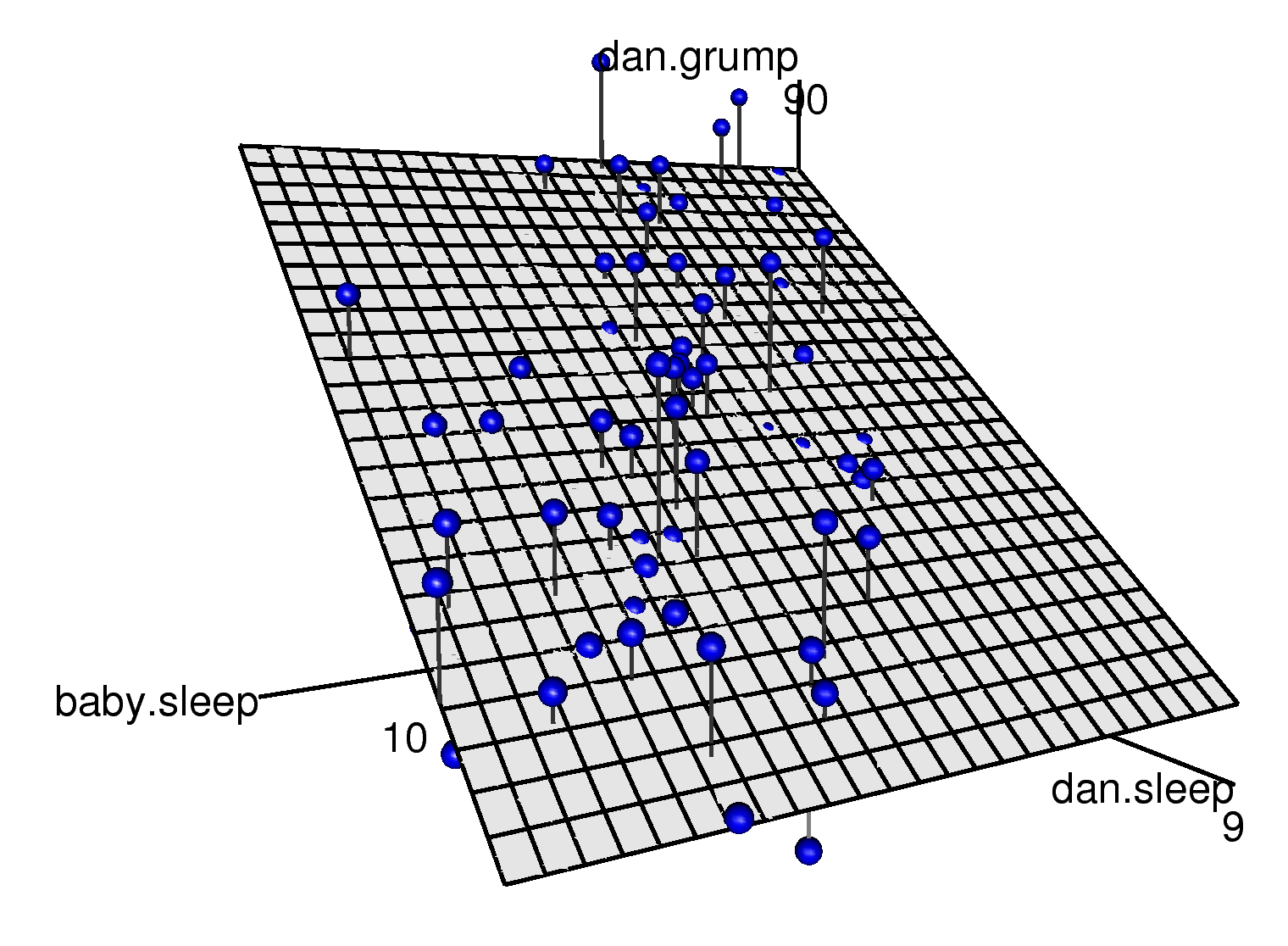

Figure 8.13: A 3D visualisation of a multiple regression model. There are two predictors in the model, dan.sleep and baby.sleep; the outcome variable is dan.grump. Together, these three variables form a 3D space: each observation (blue dots) is a point in this space. In much the same way that a simple linear regression model forms a line in 2D space, this multiple regression model forms a plane in 3D space. When we estimate the regression coefficients, what we’re trying to do is find a plane that is as close to all the blue dots as possible.

8.7.1 Doing it in R

Multiple regression in R is no different to simple regression: all we have to do is specify a more complicated formula when using the lm() function. For example, if we want to use both dan.sleep and baby.sleep as predictors in our attempt to explain why I’m so grumpy, then the formula we need is this:

dan.grump ~ dan.sleep + baby.sleep

Notice that, just like last time, I haven’t explicitly included any reference to the intercept term in this formula; only the two predictor variables and the outcome. By default, the lm() function assumes that the model should include an intercept (though you can get rid of it if you want). In any case, I can create a new regression model – which I’ll call regression.2 – using the following command:

regression.2 <- lm( formula = dan.grump ~ dan.sleep + baby.sleep, data = parenthood )

And just like last time, if we print() out this regression model we can see what the estimated regression coefficients are:

print( regression.2 )

##

## Call:

## lm(formula = dan.grump ~ dan.sleep + baby.sleep, data = parenthood)

##

## Coefficients:

## (Intercept) dan.sleep baby.sleep

## 125.96557 -8.95025 0.01052

The coefficient associated with dan.sleep is quite large, suggesting that every hour of sleep I lose makes me a lot grumpier. However, the coefficient for baby.sleep is very small, suggesting that it doesn’t really matter how much sleep my son gets; not really. What matters as far as my grumpiness goes is how much sleep I get. To get a sense of what this multiple regression model looks like, Figure 8.13 shows a 3D plot that plots all three variables, along with the regression model itself.

8.7.2 Formula for the general case

The equation that I gave above shows you what a multiple regression model looks like when you include two predictors. Not surprisingly, then, if you want more than two predictors all you have to do is add more \(X\) terms and more \(b\) coefficients. In other words, if you have \(K\) predictor variables in the model then the regression equation looks like this: \[ Y_i = \left( \sum_{k=1}^K b_{k} X_{ik} \right) + b_0 + \epsilon_i \]
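One way to see that the general formula is nothing more than “multiply each predictor by its coefficient and add everything up” is to reproduce the model’s predictions by hand. The sketch below does this on simulated data: the data frame sim borrows the variable names dan.sleep, baby.sleep and dan.grump for readability, but the values are invented and are not the parenthood data. It relies on model.matrix(), which returns the predictors with a column of 1s added for the intercept.

```r
set.seed(3)                                   # reproducible simulated data
sim <- data.frame(
  dan.sleep  = runif(100, min = 5, max = 9),
  baby.sleep = runif(100, min = 4, max = 12)
)
sim$dan.grump <- 125 - 9 * sim$dan.sleep + rnorm(100, sd = 4)

fit <- lm(dan.grump ~ dan.sleep + baby.sleep, data = sim)

X <- model.matrix(fit)   # columns: (Intercept), dan.sleep, baby.sleep
b <- coef(fit)           # estimated b_0, b_1, b_2 in the same order

# b_0 + b_1 * X_i1 + b_2 * X_i2 for every observation i at once
Y.hat <- as.vector(X %*% b)

print(head(Y.hat))
print(head(unname(fitted(fit))))
```

The two printed vectors agree, because fitted() returns exactly this weighted sum for each observation.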

8.8 Quantifying the fit of the regression model

So we now know how to estimate the coefficients of a linear regression model. The problem is, we don’t yet know if this regression model is any good. For example, the regression.1 model claims that every hour of sleep will improve my mood by quite a lot, but it might just be rubbish. Remember, the regression model only produces a prediction \(\hat{Y}_i\) about what my mood is like: my actual mood is \(Y_i\). If these two are very close, then the regression model has done a good job. If they are very different, then it has done a bad job.

8.8.1 The \(R^2\) value

Once again, let’s wrap a little bit of mathematics around this. Firstly, we’ve got the sum of the squared residuals:

\[

\mbox{SS}_{res} = \sum_i (Y_i - \hat{Y}_i)^2

\]

which we would hope to be pretty small. Specifically, what we’d like is for it to be very small in comparison to the total variability in the outcome variable,

\[

\mbox{SS}_{tot} = \sum_i (Y_i - \bar{Y})^2

\]

While we’re here, let’s calculate these values in R. Firstly, in order to make my R commands look a bit more similar to the mathematical equations, I’ll create variables X and Y:

X <- parenthood$dan.sleep # the predictor

Y <- parenthood$dan.grump # the outcome

Now that we’ve done this, let’s calculate the \(\hat{Y}\) values and store them in a variable called Y.pred. For the simple model that uses only a single predictor, regression.1, we would do the following:

Y.pred <- -8.94 * X + 125.97

Okay, now that we’ve got a variable which stores the regression model predictions for how grumpy I will be on any given day, let’s calculate our sum of squared residuals. We would do that using the following command:

SS.resid <- sum( (Y - Y.pred)^2 )

print( SS.resid )

## [1] 1838.722

Wonderful. A big number that doesn’t mean very much. Still, let’s forge boldly onwards anyway, and calculate the total sum of squares as well. That’s also pretty simple:

SS.tot <- sum( (Y - mean(Y))^2 )

print( SS.tot )

## [1] 9998.59

Hm. Well, it’s a much bigger number than the last one, so this does suggest that our regression model was making good predictions. But it’s not very interpretable.

Perhaps we can fix this. What we’d like to do is to convert these two fairly meaningless numbers into one number. A nice, interpretable number, which for no particular reason we’ll call \(R^2\). What we would like is for the value of \(R^2\) to be equal to 1 if the regression model makes no errors in predicting the data. In other words, if it turns out that the residual errors are zero – that is, if \(\mbox{SS}_{res} = 0\) – then we expect \(R^2 = 1\). Similarly, if the model is completely useless, we would like \(R^2\) to be equal to 0. What do I mean by “useless?” Tempting as it is to demand that the regression model move out of the house, cut its hair and get a real job, I’m probably going to have to pick a more practical definition: in this case, all I mean is that the residual sum of squares is no smaller than the total sum of squares, \(\mbox{SS}_{res} = \mbox{SS}_{tot}\). Wait, why don’t we do exactly that? The formula that provides us with our \(R^2\) value is pretty simple to write down, \[ R^2 = 1 - \frac{\mbox{SS}_{res}}{\mbox{SS}_{tot}} \] and equally simple to calculate in R:

R.squared <- 1 - (SS.resid / SS.tot)

print( R.squared )

## [1] 0.8161018

The \(R^2\) value, sometimes called the coefficient of determination, has a simple interpretation: it is the proportion of the variance in the outcome variable that can be accounted for by the predictor. So in this case, the fact that we have obtained \(R^2 = .816\) means that the predictor (dan.sleep) explains 81.6% of the variance in the outcome (dan.grump).

Naturally, you don’t actually need to type in all these commands yourself if you want to obtain the \(R^2\) value for your regression model. As we’ll see later on in Section 8.9.3, all you need to do is use the summary() function. However, let’s put that to one side for the moment. There’s another property of \(R^2\) that I want to point out.

8.8.2 The relationship between regression and correlation

At this point we can revisit my earlier claim that regression, in this very simple form that I’ve discussed so far, is basically the same thing as a correlation. Previously, we used the symbol \(r\) to denote a Pearson correlation. Might there be some relationship between the value of the correlation coefficient \(r\) and the \(R^2\) value from linear regression? Of course there is: the squared correlation \(r^2\) is identical to the \(R^2\) value for a linear regression with only a single predictor. To illustrate this, here’s the squared correlation:

r <- cor(X, Y) # calculate the correlation

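print( r^2 ) # print the squared correlation

## [1] 0.8161027

Yep, same number, give or take: the tiny difference in the last few digits arises only because Y.pred was computed from rounded coefficients. In other words, running a Pearson correlation is more or less equivalent to running a linear regression model that uses only one predictor variable.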
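If you would like to see the agreement to full precision, the sketch below repeats the calculation with predictions taken straight from the fitted model rather than typed in by hand. It uses simulated X and Y values (not the parenthood data), and relies on fitted() to return the predictions and on the r.squared element of the summary() output.

```r
set.seed(4)                              # reproducible simulated data
X <- runif(100, min = 5, max = 9)        # simulated predictor
Y <- 125 - 9 * X + rnorm(100, sd = 4)    # simulated outcome

fit <- lm(Y ~ X)
Y.pred <- fitted(fit)                    # predictions from the unrounded coefficients

SS.resid  <- sum((Y - Y.pred)^2)
SS.tot    <- sum((Y - mean(Y))^2)
R.squared <- 1 - (SS.resid / SS.tot)

print(R.squared)                 # R-squared computed by hand
print(cor(X, Y)^2)               # squared Pearson correlation
print(summary(fit)$r.squared)    # R-squared as reported by R
```

All three printed values agree up to floating point error.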

8.8.3 The adjusted \(R^2\) value

One final thing to point out before moving on. It’s quite common for people to report a slightly different measure of model performance, known as “adjusted \(R^2\).” The motivation behind calculating the adjusted \(R^2\) value is the observation that adding more predictors into the model will always cause the \(R^2\) value to increase (or at least not decrease). The adjusted \(R^2\) value introduces a slight change to the calculation, as follows. For a regression model with \(K\) predictors, fit to a data set containing \(N\) observations, the adjusted \(R^2\) is: \[ \mbox{adj. } R^2 = 1 - \left(\frac{\mbox{SS}_{res}}{\mbox{SS}_{tot}} \times \frac{N-1}{N-K-1} \right) \] This adjustment is an attempt to take the degrees of freedom into account. The big advantage of the adjusted \(R^2\) value is that when you add more predictors to the model, the adjusted \(R^2\) value will only increase if the new variables improve the model performance more than you’d expect by chance. The big disadvantage is that the adjusted \(R^2\) value can’t be interpreted in the elegant way that \(R^2\) can. \(R^2\) has a simple interpretation as the proportion of variance in the outcome variable that is explained by the regression model; to my knowledge, no equivalent interpretation exists for adjusted \(R^2\).

An obvious question then, is whether you should report \(R^2\) or adjusted \(R^2\). This is probably a matter of personal preference. If you care more about interpretability, then \(R^2\) is better. If you care more about correcting for bias, then adjusted \(R^2\) is probably better. Speaking just for myself, I prefer \(R^2\): my feeling is that it’s more important to be able to interpret your measure of model performance. Besides, as we’ll see in Section 8.9, if you’re worried that the improvement in \(R^2\) that you get by adding a predictor is just due to chance and not because it’s a better model, well, we’ve got hypothesis tests for that.
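The adjusted \(R^2\) formula is easy to check by hand. Here is a sketch on simulated data, in which the variables x1, x2 and y are invented for the purpose and x2 is pure noise by construction. The hand-computed value is compared against the adj.r.squared element that summary() reports for an lm object.

```r
set.seed(5)                               # reproducible simulated data
N <- 100
sim <- data.frame(x1 = rnorm(N), x2 = rnorm(N))
sim$y <- 2 + 3 * sim$x1 + rnorm(N)        # x2 plays no role in generating y

fit <- lm(y ~ x1 + x2, data = sim)
K <- 2                                    # number of predictors in the model

SS.res <- sum(resid(fit)^2)
SS.tot <- sum((sim$y - mean(sim$y))^2)

R2     <- 1 - SS.res / SS.tot
adj.R2 <- 1 - (SS.res / SS.tot) * (N - 1) / (N - K - 1)

print(R2)
print(adj.R2)
print(summary(fit)$adj.r.squared)
```

Because the correction factor \((N-1)/(N-K-1)\) is larger than 1, the adjusted value comes out a little below the ordinary \(R^2\).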

8.9 Hypothesis tests for regression models

So far we’ve talked about what a regression model is, how the coefficients of a regression model are estimated, and how we quantify the performance of the model (the last of these, incidentally, is basically our measure of effect size). The next thing we need to talk about is hypothesis tests. There are two different (but related) kinds of hypothesis tests that we need to talk about: those in which we test whether the regression model as a whole is performing significantly better than a null model; and those in which we test whether a particular regression coefficient is significantly different from zero.

At this point, you’re probably groaning internally, thinking that I’m going to introduce a whole new collection of tests…Me too. I’m so sick of hypothesis tests that I’m going to shamelessly reuse the \(F\)-test from ANOVA and the \(t\)-test. In fact, all I’m going to do in this section is show you how those tests are imported wholesale into the regression framework.

8.9.1 Testing the model as a whole: The omnibus test

Okay, suppose you’ve estimated your regression model. The first hypothesis test you might want to try is one in which the null hypothesis is that there is no relationship between the predictors and the outcome, and the alternative hypothesis is that the data are distributed in exactly the way that the regression model predicts. Formally, our “null model” corresponds to the fairly trivial “regression” model in which we include 0 predictors, and only include the intercept term \(b_0\) \[ H_0: Y_i = b_0 + \epsilon_i \] If our regression model has \(K\) predictors, the “alternative model” is described using the usual formula for a multiple regression model: \[ H_1: Y_i = \left( \sum_{k=1}^K b_{k} X_{ik} \right) + b_0 + \epsilon_i \]

How can we test these two hypotheses against each other? The trick is to understand that just like we did with ANOVA, it’s possible to divide up the total variance \(\mbox{SS}_{tot}\) into the sum of the residual variance \(\mbox{SS}_{res}\) and the regression model variance \(\mbox{SS}_{mod}\). I’ll skip over the technicalities, since we will cover most of them in a future ANOVA chapter, and just note that the sum of squares for the model is equal to the total sum of squares minus the sum of squares for the residual: \[ \mbox{SS}_{mod} = \mbox{SS}_{tot} - \mbox{SS}_{res} \] And, just like we will do with the ANOVA, we can convert the sums of squares into mean squares by dividing by the degrees of freedom. \[ \begin{array}{rcl} \mbox{MS}_{mod} &=& \displaystyle\frac{\mbox{SS}_{mod} }{df_{mod}} \\ \\ \mbox{MS}_{res} &=& \displaystyle\frac{\mbox{SS}_{res} }{df_{res} } \end{array} \]

So, how many degrees of freedom do we have? As you might expect, the \(df\) associated with the model is closely tied to the number of predictors that we’ve included. In fact, it turns out that \(df_{mod} = K\). For the residuals, the total degrees of freedom is \(df_{res} = N -K - 1\).

Now that we’ve got our mean square values, you’re probably going to be entirely unsurprised to discover that we can calculate an \(F\)-statistic like this: \[ F = \frac{\mbox{MS}_{mod}}{\mbox{MS}_{res}} \] and the degrees of freedom associated with this are \(K\) and \(N-K-1\). This \(F\) statistic has exactly the same interpretation as for ANOVA. Large \(F\) values indicate that the null hypothesis is performing poorly in comparison to the alternative hypothesis. And since the tedious “do it the long way” calculations belong to the ANOVA chapter, I won’t waste your time on them here. In a moment I’ll show you how to do the test in R the easy way, but first, let’s have a look at the tests for the individual regression coefficients.
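For anyone who would like to see the arithmetic once before moving on, here is a minimal sketch of the omnibus test done by hand. It does not use the parenthood data: the data frame `sim` and the variables `x1`, `x2` and `y` are simulated stand-ins invented for this illustration, so the numbers will not match the output shown elsewhere in this chapter.

```r
# Simulated stand-in data (hypothetical; NOT the parenthood data set)
set.seed(1)
N  <- 100
x1 <- rnorm(N, mean = 7, sd = 1)        # first predictor
x2 <- rnorm(N, mean = 8, sd = 2)        # second predictor, unrelated to y
y  <- 125 - 9 * x1 + rnorm(N, sd = 4)   # outcome depends on x1 only
sim <- data.frame(y, x1, x2)

model <- lm(y ~ x1 + x2, data = sim)
K <- 2                                   # number of predictors

# Sums of squares: SS_mod = SS_tot - SS_res
ss_tot <- sum((sim$y - mean(sim$y))^2)
ss_res <- sum(residuals(model)^2)
ss_mod <- ss_tot - ss_res

# Degrees of freedom and mean squares
df_mod <- K
df_res <- N - K - 1
ms_mod <- ss_mod / df_mod
ms_res <- ss_res / df_res

# F statistic and its p-value (upper tail of the F distribution)
F_stat  <- ms_mod / ms_res
p_value <- pf(F_stat, df_mod, df_res, lower.tail = FALSE)

# Compare with the value that summary() stores for the same model
F_from_summary <- summary(model)$fstatistic   # named: value, numdf, dendf
print(c(by_hand = F_stat, from_summary = unname(F_from_summary["value"])))
```

The two printed values should agree, which is the point: the \(F\)-statistic that R reports is just \(\mbox{MS}_{mod} / \mbox{MS}_{res}\) with \(K\) and \(N-K-1\) degrees of freedom.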

8.9.2 Tests for individual coefficients

The \(F\)-test that we’ve just introduced is useful for checking that the model as a whole is performing better than chance. This is important: if your regression model doesn’t produce a significant result for the \(F\)-test then you probably don’t have a very good regression model (or, quite possibly, you don’t have very good data). However, while failing this test is a pretty strong indicator that the model has problems, passing the test (i.e., rejecting the null) doesn’t imply that the model is good! Why is that, you might be wondering? The answer to that can be found by looking at the coefficients for the regression.2 model:

print( regression.2 )

##

## Call:

## lm(formula = dan.grump ~ dan.sleep + baby.sleep, data = parenthood)

##

## Coefficients:

## (Intercept) dan.sleep baby.sleep

## 125.96557 -8.95025 0.01052

I can’t help but notice that the estimated regression coefficient for the baby.sleep variable is tiny (0.01), relative to the value that we get for dan.sleep (-8.95). Given that these two variables are on exactly the same scale (they’re both measured in “hours slept”), I find this suspicious. In fact, I’m beginning to suspect that it’s really only the amount of sleep that I get that matters in order to predict my grumpiness.

Once again, we can reuse a hypothesis test that we discussed earlier, this time the \(t\)-test. The test that we’re interested in has a null hypothesis that the true regression coefficient is zero (\(b = 0\)), which is to be tested against the alternative hypothesis that it isn’t (\(b \neq 0\)). That is: \[ \begin{array}{rl} H_0: & b = 0 \\ H_1: & b \neq 0 \end{array} \] How can we test this? Well, if the central limit theorem is kind to us, we might be able to guess that the sampling distribution of \(\hat{b}\), the estimated regression coefficient, is a normal distribution with mean centred on \(b\). What that would mean is that if the null hypothesis were true, then the sampling distribution of \(\hat{b}\) has mean zero and unknown standard deviation. Assuming that we can come up with a good estimate for the standard error of the regression coefficient, \(\mbox{SE}({\hat{b}})\), then we’re in luck. That’s exactly the situation for which we introduced the one-sample \(t\) way back in Chapter 9. So let’s define a \(t\)-statistic like this, \[ t = \frac{\hat{b}}{\mbox{SE}({\hat{b}})} \] I’ll skip over the reasons why, but our degrees of freedom in this case are \(df = N- K- 1\). Irritatingly, the estimate of the standard error of the regression coefficient, \(\mbox{SE}({\hat{b}})\), is not as easy to calculate as the standard error of the mean that we used for the simpler \(t\)-tests and \(z\)-tests. In fact, the formula is somewhat ugly, and not terribly helpful to look at. For our purposes it’s sufficient to point out that the standard error of the estimated regression coefficient depends on both the predictor and outcome variables, and is somewhat sensitive to violations of the homogeneity of variance assumption (discussed shortly).

In any case, this \(t\)-statistic can be interpreted in the same way as any \(t\)-statistic. Assuming that you have a two-sided alternative (i.e., you don’t really care if \(b >0\) or \(b < 0\)), then it’s the extreme values of \(t\) (i.e., a lot less than zero or a lot greater than zero) that suggest that you should reject the null hypothesis.
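To make the recipe concrete, the following is a minimal sketch of the coefficient tests computed by hand. Again the data are simulated stand-ins (`x1`, `x2` and `y` are hypothetical names, not variables from the parenthood data). Rather than writing out the ugly standard error formula, the sketch pulls the standard errors out of the fitted model with `vcov()`, which returns the estimated variance-covariance matrix of the coefficients.

```r
# Simulated stand-in data (hypothetical; NOT the parenthood data set)
set.seed(1)
N  <- 100
x1 <- rnorm(N, mean = 7, sd = 1)
x2 <- rnorm(N, mean = 8, sd = 2)
y  <- 125 - 9 * x1 + rnorm(N, sd = 4)   # x2 has no true effect on y

model <- lm(y ~ x1 + x2)

b_hat <- coef(model)                    # estimated coefficients
se_b  <- sqrt(diag(vcov(model)))        # standard errors of the coefficients
df    <- df.residual(model)             # N - K - 1

# t statistic for H0: b = 0, and the two-sided p-value
t_stat      <- b_hat / se_b
p_two_sided <- 2 * pt(abs(t_stat), df = df, lower.tail = FALSE)

by_hand <- cbind(Estimate = b_hat, SE = se_b, t = t_stat, p = p_two_sided)
print(by_hand)

# The same quantities as R computes them
print(coef(summary(model)))
```

The two printed tables should match column for column. Using `abs(t_stat)` and doubling the upper tail probability is what makes the test two-sided: large values of \(t\) in either direction count against the null hypothesis.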

8.9.3 Running the hypothesis tests in R

To compute all of the quantities that we have talked about so far, all you need to do is ask for a summary() of your regression model. Since I’ve been using regression.2 as my example, let’s do that:

summary( regression.2 )

##

## Call:

## lm(formula = dan.grump ~ dan.sleep + baby.sleep, data = parenthood)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0345 -2.2198 -0.4016 2.6775 11.7496

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 125.96557 3.04095 41.423 <2e-16 ***

## dan.sleep -8.95025 0.55346 -16.172 <2e-16 ***

## baby.sleep 0.01052 0.27106 0.039 0.969

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.354 on 97 degrees of freedom

## Multiple R-squared: 0.8161, Adjusted R-squared: 0.8123

## F-statistic: 215.2 on 2 and 97 DF, p-value: < 2.2e-16

The output that this command produces is pretty dense, but we’ve already discussed everything of interest in it, so what I’ll do is go through it line by line. The first line reminds us of what the actual regression model is:

Call:

lm(formula = dan.grump ~ dan.sleep + baby.sleep, data = parenthood)

You can see why this is handy, since it was a little while back when we actually created the regression.2 model, and so it’s nice to be reminded of what it was we were doing. The next part provides a quick summary of the residuals (i.e., the \(\epsilon_i\) values),

Residuals:

Min 1Q Median 3Q Max

-11.0345 -2.2198 -0.4016 2.6775 11.7496

which can be convenient as a quick and dirty check that the model is okay. Remember, we did assume that these residuals were normally distributed, with mean 0. In particular it’s worth quickly checking to see if the median is close to zero, and to see if the first quartile is about the same size as the third quartile. If they look badly off, there’s a good chance that the assumptions of regression are violated. These ones look pretty nice to me, so let’s move on to the interesting stuff. The next part of the R output looks at the coefficients of the regression model:

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 125.96557 3.04095 41.423 <2e-16 ***

dan.sleep -8.95025 0.55346 -16.172 <2e-16 ***

baby.sleep 0.01052 0.27106 0.039 0.969

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Each row in this table refers to one of the coefficients in the regression model. The first row is the intercept term, and the later ones look at each of the predictors. The columns give you all of the relevant information. The first column is the actual estimate of \(b\) (e.g., 125.97 for the intercept, and -8.95 for the dan.sleep predictor). The second column is the standard error estimate \(\mbox{SE}({\hat{b}})\). The third column gives you the \(t\)-statistic, and it’s worth noticing that in this table \(t= \hat{b}/\mbox{SE}({\hat{b}})\) every time. Finally, the fourth column gives you the actual \(p\) value for each of these tests.121 The only thing that the table itself doesn’t list is the degrees of freedom used in the \(t\)-test, which is always \(N-K-1\) and is listed immediately below, in this line:

Residual standard error: 4.354 on 97 degrees of freedom

The value of \(df = 97\) is equal to \(N-K-1\) (here \(N = 100\) observations and \(K = 2\) predictors), so that’s what we use for our \(t\)-tests. In the final part of the output we have the \(F\)-test and the \(R^2\) values, which assess the performance of the model as a whole:

Residual standard error: 4.354 on 97 degrees of freedom

Multiple R-squared: 0.8161, Adjusted R-squared: 0.8123

F-statistic: 215.2 on 2 and 97 DF, p-value: < 2.2e-16

So in this case, the model performs significantly better than you’d expect by chance (\(F(2,97) = 215.2\), \(p<.001\)), which isn’t all that surprising: the \(R^2 = .816\) value indicates that the regression model accounts for 81.6% of the variability in the outcome measure. However, when we look back up at the \(t\)-tests for each of the individual coefficients, we lack evidence that the baby.sleep variable has a significant effect; all the work could be done by the dan.sleep variable. Taken together, these results suggest that regression.2 is actually the wrong model for the data: you’d probably be better off dropping the baby.sleep predictor entirely. In other words, the regression.1 model that we started with is the better model.
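As a sanity check on how the pieces of this output fit together, here is a small sketch that recomputes the test statistics from the rounded numbers printed above. Nothing here touches the parenthood data itself: the estimates, standard errors, \(R^2\) and degrees of freedom are typed in by hand from the output, and the variable names (`est`, `se`, `r2`, and so on) are my own. The \(F\) calculation relies on the standard identity \(F = (R^2 / K) \, / \, ((1 - R^2) / (N - K - 1))\), which follows from dividing both mean squares by \(\mbox{SS}_{tot}\).

```r
# Values copied by hand from the summary( regression.2 ) output
est <- c(dan.sleep = -8.95025, baby.sleep = 0.01052)   # Estimate column
se  <- c(dan.sleep =  0.55346, baby.sleep = 0.27106)   # Std. Error column
r2     <- 0.8161    # Multiple R-squared
K      <- 2         # number of predictors
df_res <- 97        # residual degrees of freedom, N - K - 1

# t statistics and two-sided p-values for each predictor
t_vals <- est / se
p_vals <- 2 * pt(abs(t_vals), df = df_res, lower.tail = FALSE)

# Omnibus F statistic rebuilt from R-squared, and its p-value
F_val <- (r2 / K) / ((1 - r2) / df_res)
p_F   <- pf(F_val, K, df_res, lower.tail = FALSE)

print(round(t_vals, 3))
print(round(p_vals, 3))
print(round(F_val, 1))
print(p_F)
```

Because the inputs are rounded, the results can differ from the printed output in the last decimal place, but they should land very close to the reported \(t\) values, the \(p = 0.969\) for baby.sleep, and the \(F\)-statistic of 215.2.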

8.10 Testing the significance of a correlation

8.10.1 Hypothesis tests for a single correlation

I don’t want to spend too much time on this, but it’s worth very briefly returning to the point I made earlier, that Pearson correlations are basically the same thing as linear regressions with only a single predictor added to the model. What this means is that the hypothesis tests that I just described in a regression context can also be applied to correlation coefficients. To see this, let’s take a summary() of the regression.1 model:

summary( regression.1 )

##

## Call:

## lm(formula = dan.grump ~ dan.sleep, data = parenthood)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.025 -2.213 -0.399 2.681 11.750

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 125.9563 3.0161 41.76 <2e-16 ***

## dan.sleep -8.9368 0.4285 -20.85 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.332 on 98 degrees of freedom

## Multiple R-squared: 0.8161, Adjusted R-squared: 0.8142

## F-statistic: 434.9 on 1 and 98 DF, p-value: < 2.2e-16

The important thing to note here is the \(t\)-test associated with the predictor, in which we get a result of \(t(98) = -20.85\), \(p<.001\). Now let’s compare this to the output of a different function, which goes by the name of cor.test(). As you might expect, this function runs a hypothesis test to see if the observed correlation between two variables is significantly different from 0. Let’s have a look:

cor.test( x = parenthood$dan.sleep, y = parenthood$dan.grump )

##

## Pearson's product-moment correlation

##

## data: parenthood$dan.sleep and parenthood$dan.grump

## t = -20.854, df = 98, p-value < 2.2e-16

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.9340614 -0.8594714

## sample estimates:

## cor

## -0.903384

Again, the key thing to note is the line that reports the hypothesis test itself, which seems to be saying that \(t(98) = -20.85\), \(p<.001\). Hm. Looks like it’s exactly the same test, doesn’t it? And that’s exactly what it is. The test for the significance of a correlation is identical to the \(t\)-test that we run on a coefficient in a regression model.
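The equivalence is easy to check on any data set. The sketch below uses simulated stand-in variables (`sleep` and `grump` are hypothetical names invented here, not the parenthood data) and compares three routes to the same number: the slope's \(t\)-statistic from `lm()`, the statistic reported by `cor.test()`, and the textbook formula \(t = r \sqrt{N-2} \, / \sqrt{1 - r^2}\), which is not derived in this chapter but is the standard expression for this test.

```r
# Simulated stand-in data (hypothetical; NOT the parenthood data set)
set.seed(42)
N     <- 100
sleep <- rnorm(N, mean = 7, sd = 1)
grump <- 125 - 9 * sleep + rnorm(N, sd = 4)

# Route 1: t statistic for the slope in a one-predictor regression
slope_t <- coef(summary(lm(grump ~ sleep)))["sleep", "t value"]

# Route 2: t statistic reported by cor.test()
ct    <- cor.test(x = sleep, y = grump)
cor_t <- unname(ct$statistic)

# Route 3: computed directly from the correlation coefficient
r        <- unname(ct$estimate)
t_from_r <- r * sqrt(N - 2) / sqrt(1 - r^2)

print(c(lm = slope_t, cor.test = cor_t, formula = t_from_r))
print(unname(ct$parameter))   # degrees of freedom, N - 2
```

All three values should be identical up to floating point error. Plugging the correlation reported above, \(r = -0.903384\) with \(N = 100\), into the same formula gives approximately \(-20.85\), matching both outputs.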

8.10.2 Hypothesis tests for all pairwise correlations

Okay, one more digression before I return to regression properly. In the previous section I talked about the cor.test() function, which lets you run a hypothesis test on a single correlation. The cor.test() function is (obviously) an extension of the cor() function, which we talked about in Section 8.4. However, the cor() function isn’t restricted to computing a single correlation: you can use it to compute all pairwise correlations among the variables in your data set. This leads people to the natural question: can the cor.test() function do the same thing? Can we use cor.test() to run hypothesis tests for all possible pairwise correlations among the variables in a data frame?

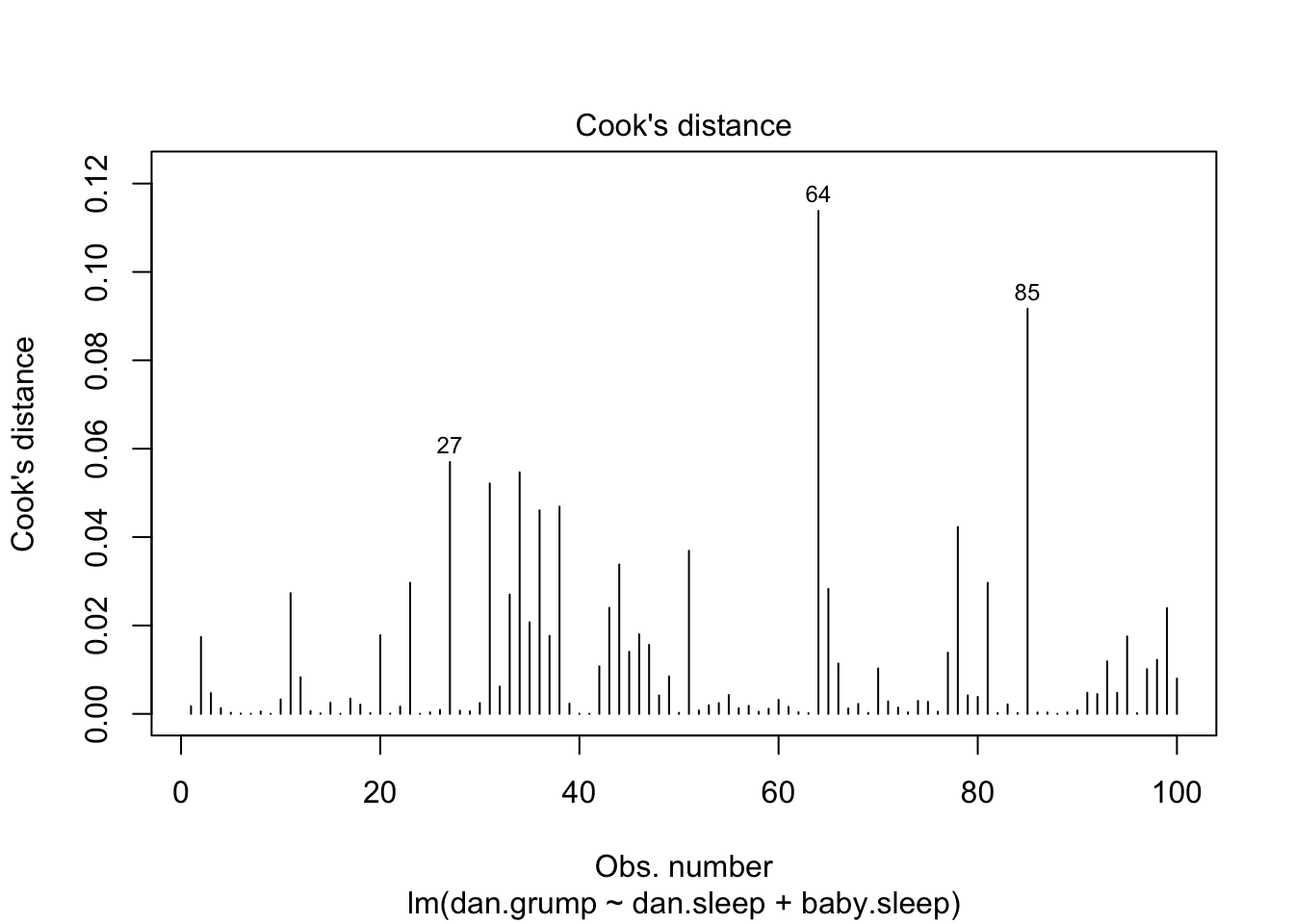

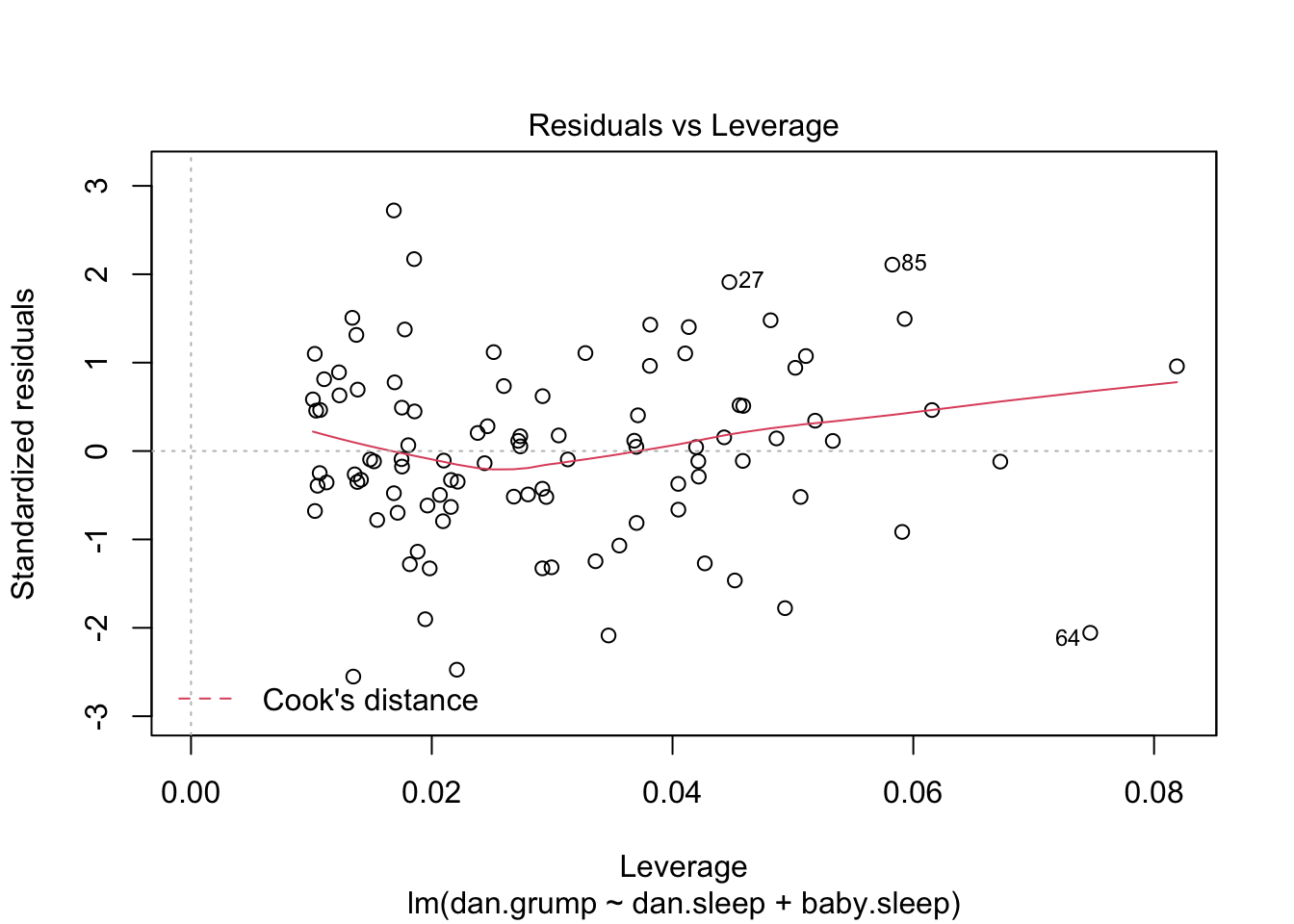

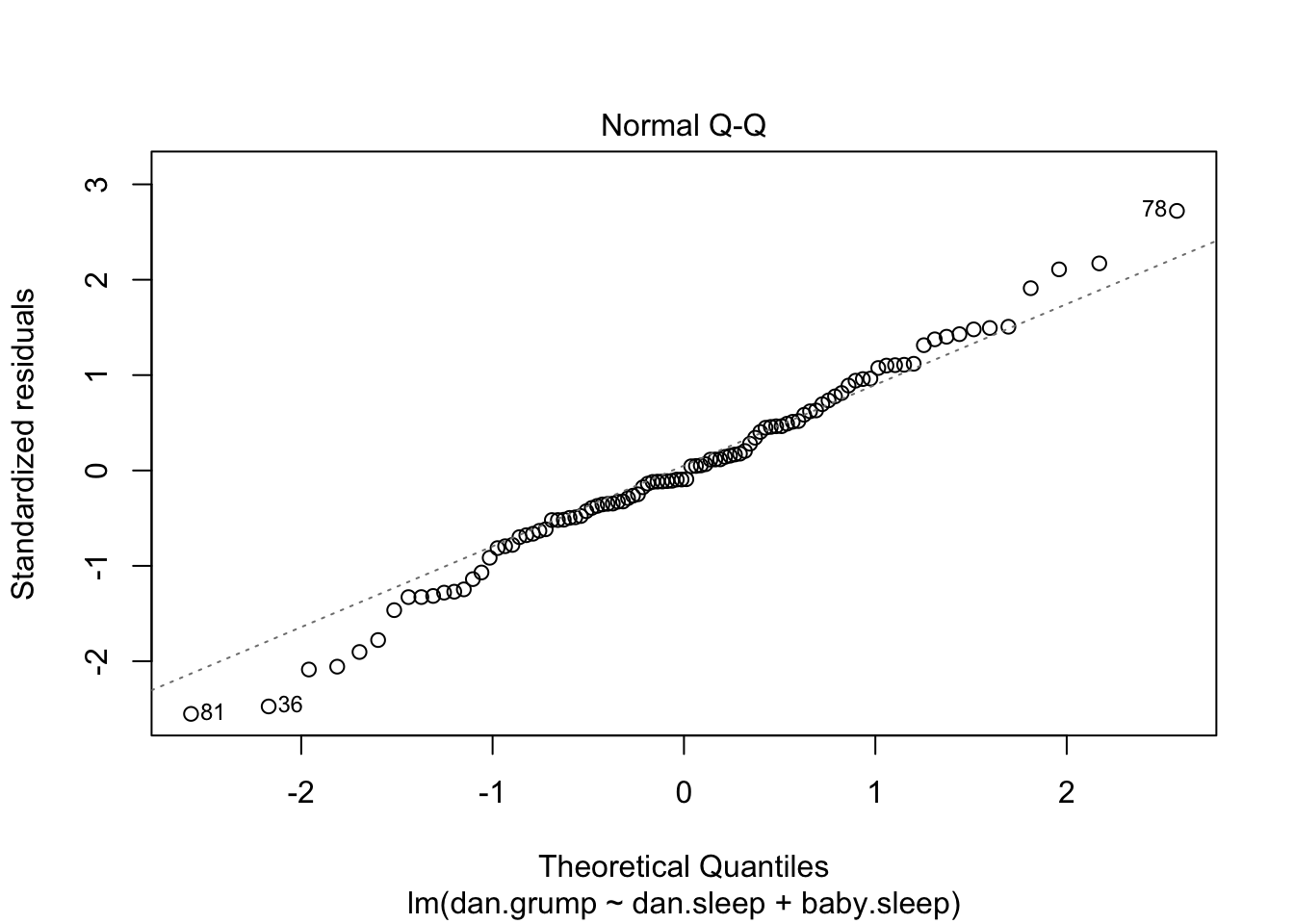

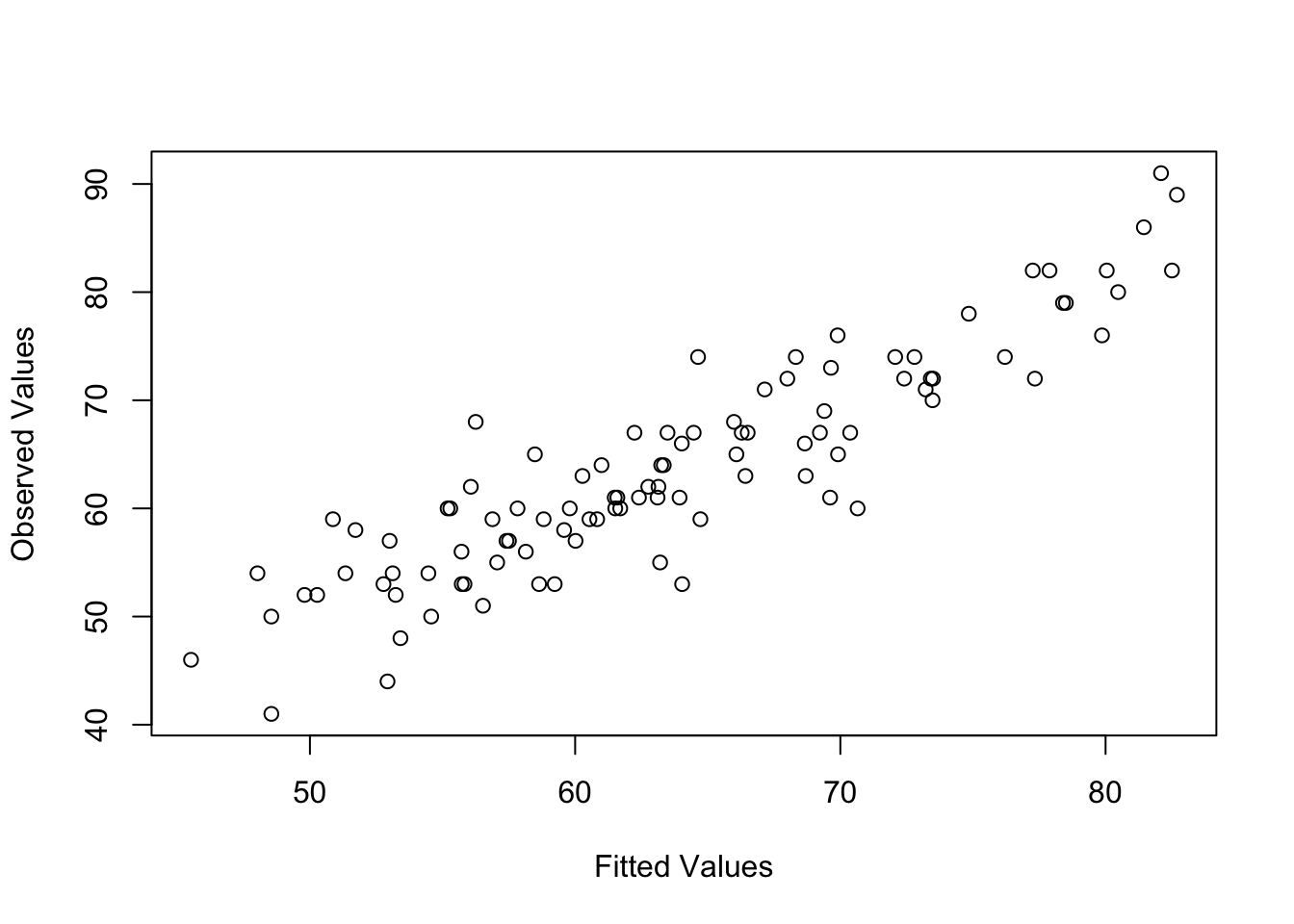

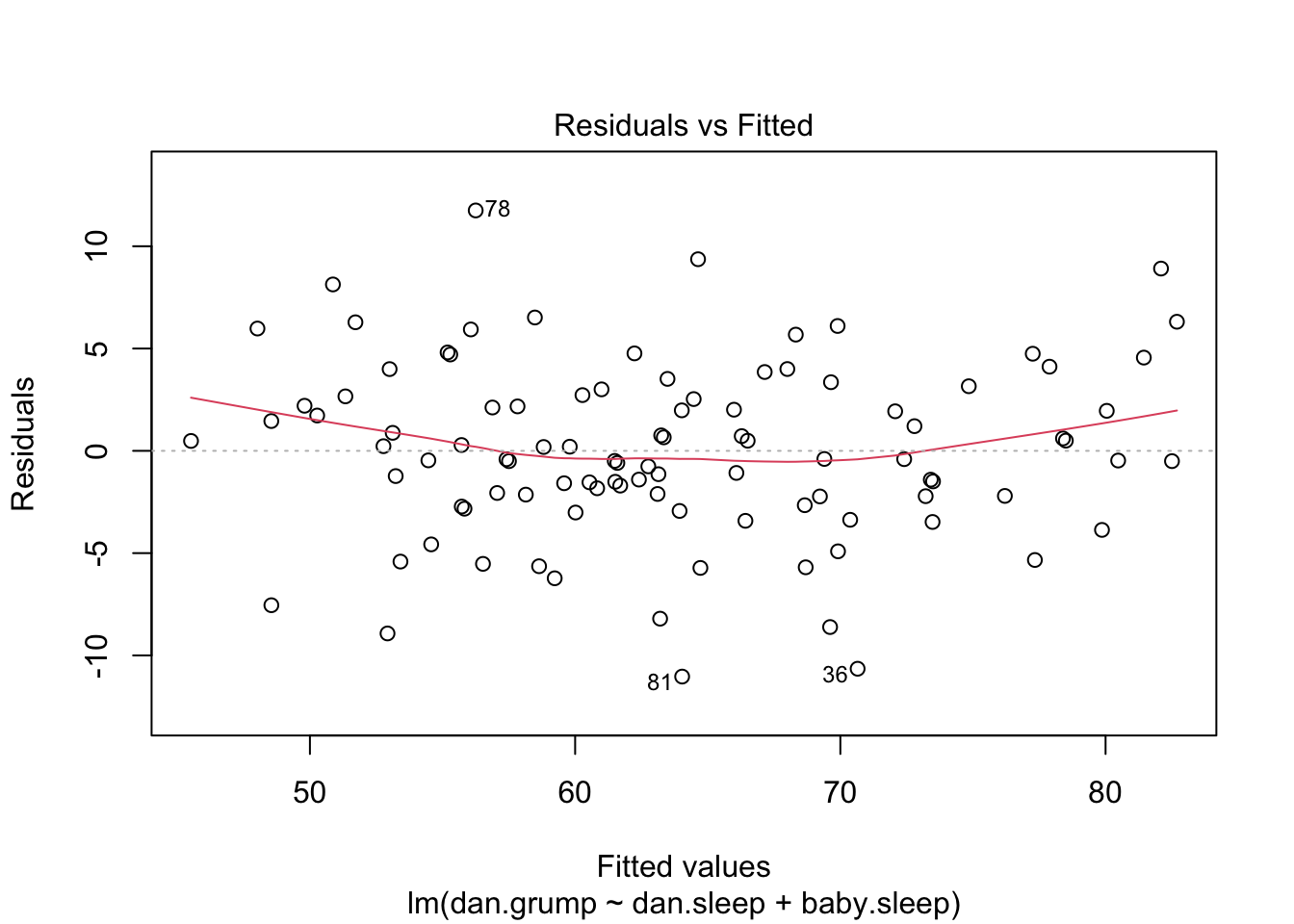

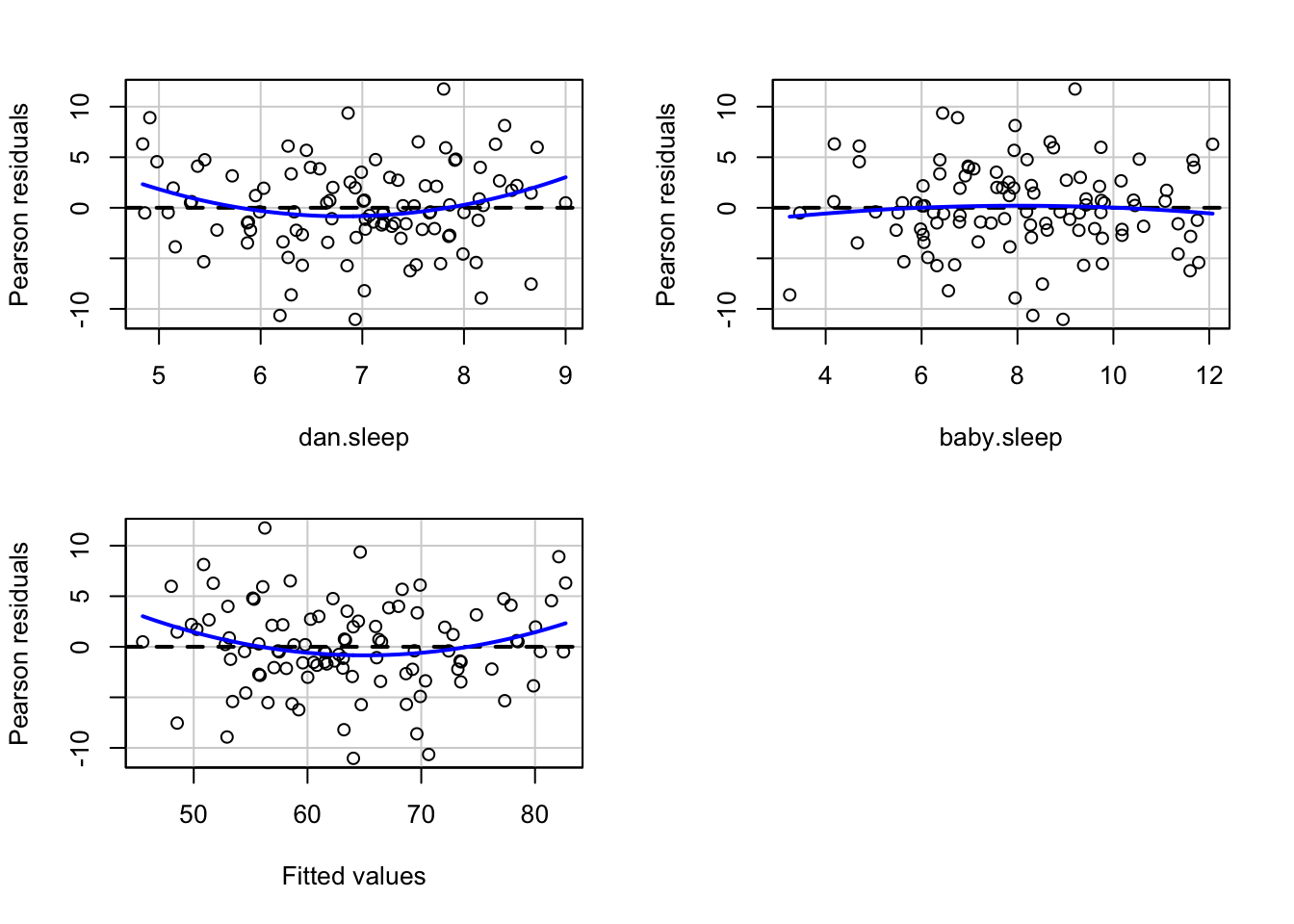

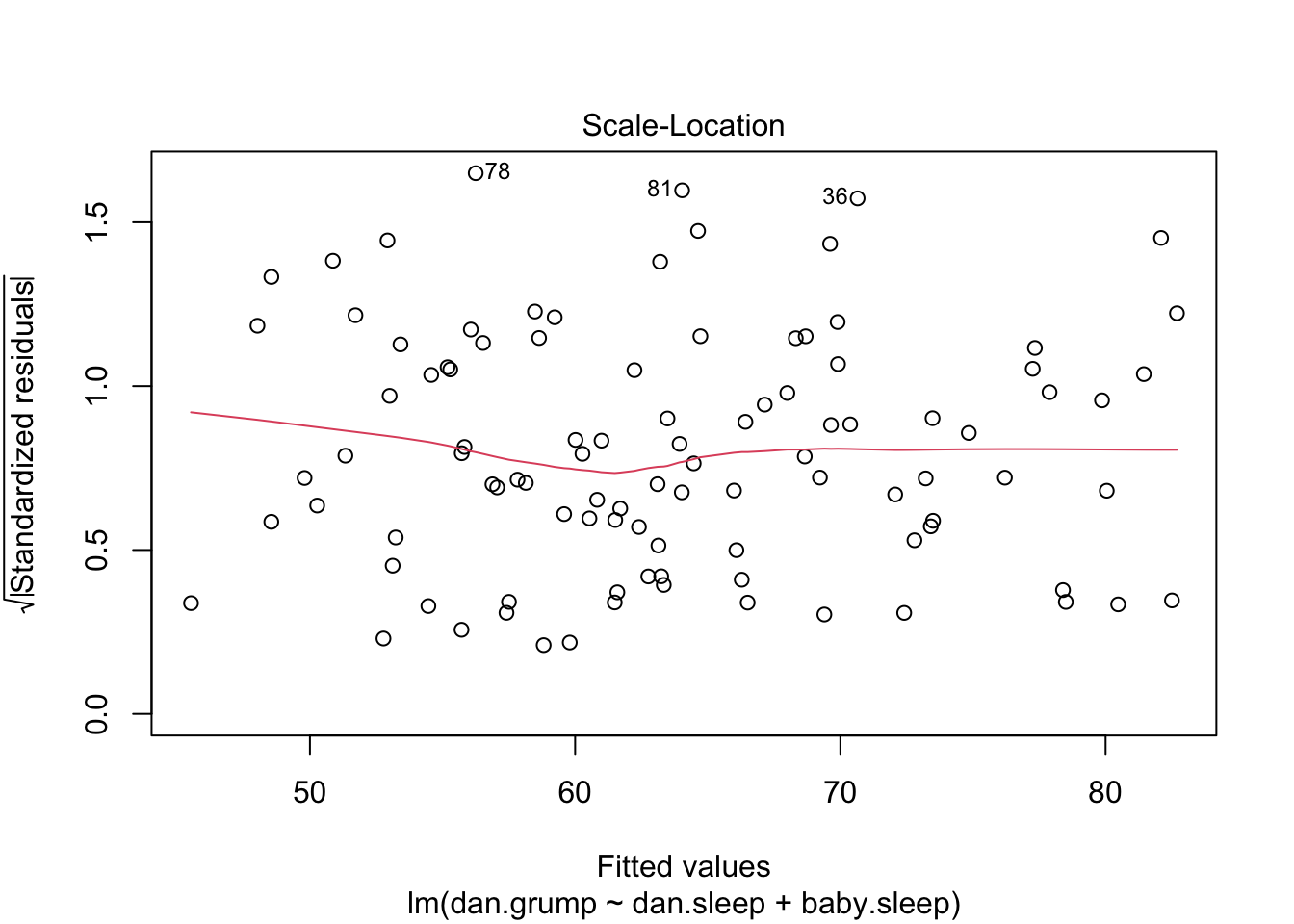

The answer is no, and there’s a very good reason for this. Testing a single correlation is fine: if you’ve got some reason to be asking “is A related to B?” then you should absolutely run a test to see if there’s a significant correlation. But if you’ve got variables A, B, C, D and E and you’re thinking about testing the correlations among all possible pairs of these, a statistician would want to ask: what’s your hypothesis? If you’re in the position of wanting to test all possible pairs of variables, then you’re pretty clearly on a fishing expedition, hunting around in search of significant effects when you don’t actually have a clear research hypothesis in mind. This is dangerous, and the authors of cor.test() obviously felt that they didn’t want to support that kind of behaviour.